Introduction


Artificial Intelligence (AI) has shifted from a futuristic concept to a powerful driver of transformation in modern healthcare. From analyzing complex medical data to assisting in surgeries, AI applications are significantly improving healthcare accessibility, efficiency, and outcomes. But as AI systems gain more influence in clinical decision-making, the importance of responsibility, ethics, and transparency grows accordingly.

Responsible AI in healthcare is not just about developing advanced algorithms—it’s about ensuring that these systems respect human values, support clinicians, protect patients, and operate under stringent ethical and regulatory guardrails.

The Ethical Imperative

In healthcare, every decision—automated or human-made—can have life-altering consequences. That’s why AI must be governed by ethical principles that prioritize the well-being of patients and uphold human dignity.

- Autonomy and Consent: Patients must understand and agree to the involvement of AI in their care.

- Clinician Authority: AI tools should support—not override—clinician expertise. Human oversight must always be retained.

- Non-maleficence and Beneficence: AI must minimize harm and promote patient benefit in every use case.

Without strong ethical foundations, even the most advanced systems can lead to distrust, misuse, and disparities.

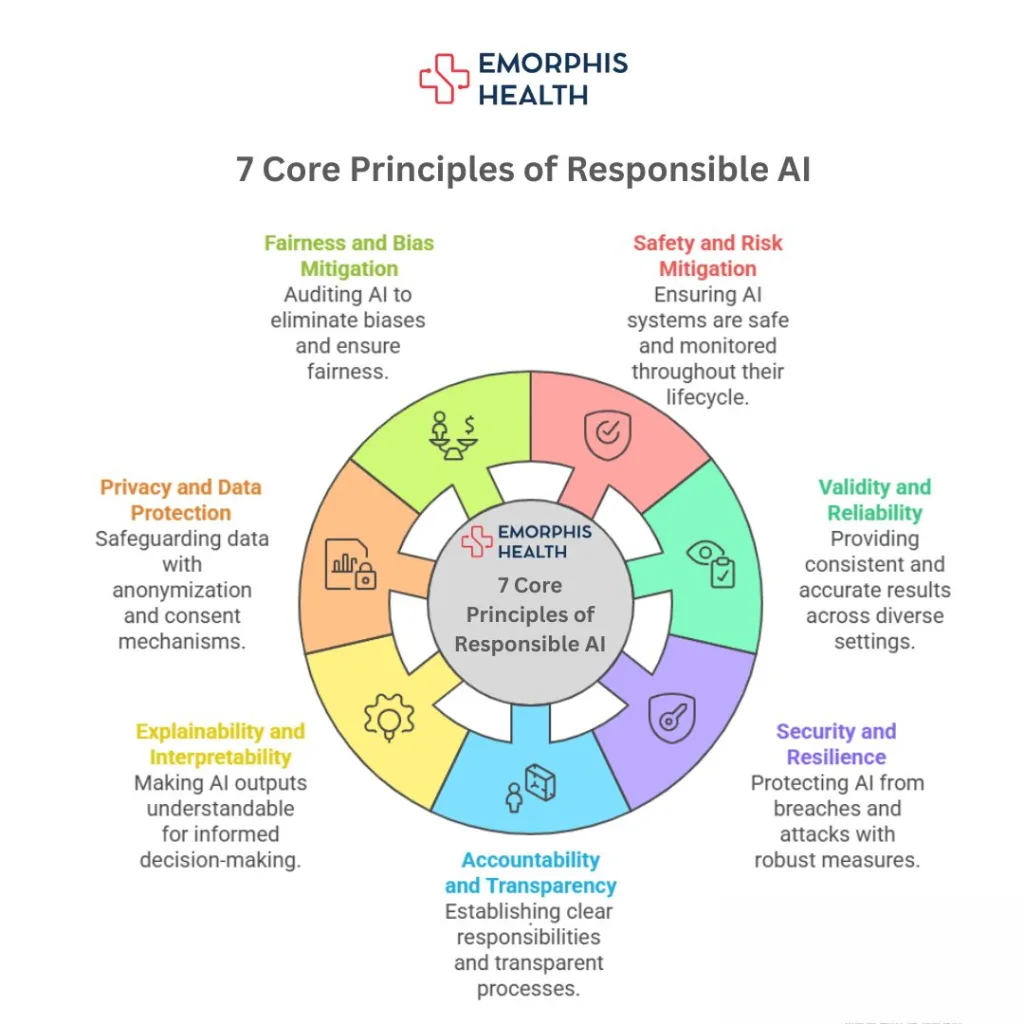

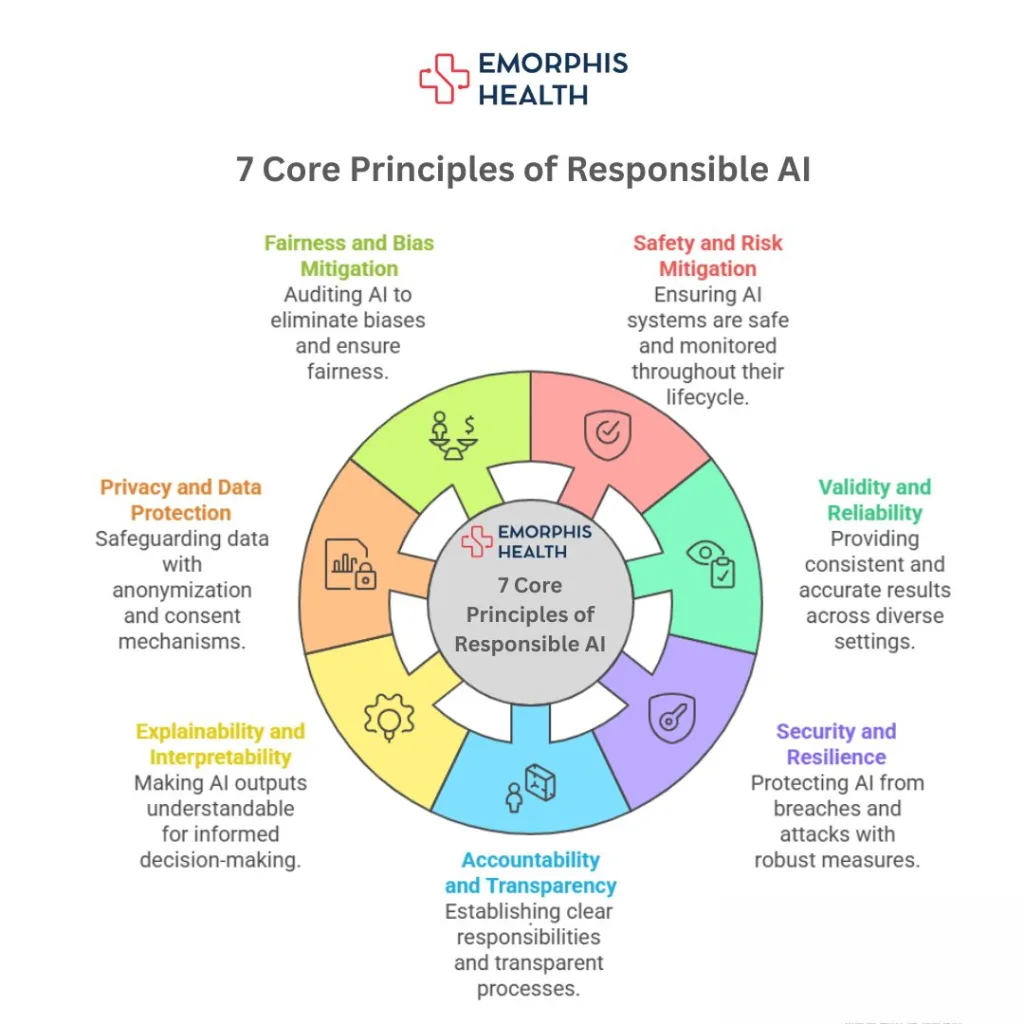

7 Core Principles of Responsible AI

a. Safety and Risk Mitigation

AI systems should undergo rigorous safety assessments during development and deployment. Fail-safes and continuous post-market surveillance must be part of the lifecycle.

b. Validity and Reliability

The system should consistently provide accurate and clinically relevant results across various environments, devices, and populations.

c. Security and Resilience

AI must be protected from breaches, tampering, and adversarial attacks. Secure architecture, encryption, and anomaly detection are vital.
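Tamper detection can start with something as simple as verifying a model file against a checksum recorded when the model was approved. The sketch below assumes such a SHA-256 digest is stored somewhere trustworthy (for example, a model registry); the file name and helper functions are hypothetical, and this check complements rather than replaces access control and encryption.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: str, expected_sha256: str) -> bool:
    """True only if the file matches the digest recorded at release (constant-time compare)."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())


# Usage: create a stand-in "model file", record its digest, then tamper with it.
with tempfile.TemporaryDirectory() as tmp:
    model_path = os.path.join(tmp, "model.bin")  # hypothetical artifact name
    with open(model_path, "wb") as f:
        f.write(b"model weights v1")
    released_digest = sha256_of_file(model_path)
    intact = verify_model_artifact(model_path, released_digest)

    with open(model_path, "ab") as f:
        f.write(b"injected bytes")  # simulated tampering
    tampered = verify_model_artifact(model_path, released_digest)

print(intact, tampered)  # True False
```

A deployment pipeline would refuse to load any artifact for which this check returns False.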

d. Accountability and Transparency

There should be clear lines of responsibility—who created the model, who approved it, who uses it, and who answers when it fails. Transparency in design and usage is essential.

e. Explainability and Interpretability

Outputs must be understandable by healthcare providers. This helps in making informed decisions, especially in high-risk or legally sensitive scenarios.

f. Privacy and Data Protection

Data anonymization, consent mechanisms, and privacy-by-design frameworks should be non-negotiable components of AI development.

g. Fairness and Bias Mitigation

Continuous auditing must be conducted to detect and reduce algorithmic biases related to race, gender, age, or socio-economic background.

Practical Applications in Healthcare

AI is now applied across a wide spectrum of healthcare operations, often with promising results:

- Clinical Decision Support: AI assists physicians in selecting treatments, flagging potential complications, and avoiding adverse drug reactions.

- Medical Imaging Analysis: Deep learning algorithms detect tumors, fractures, and abnormalities in MRIs, CTs, and X-rays with high precision.

- Patient Triage: AI tools prioritize emergency patients based on severity and symptoms, saving valuable time in ER settings.

- Predictive Analytics: By analyzing past patient data, AI can predict sepsis onset, risk of hospital readmission, or likelihood of disease progression.

- Remote Patient Monitoring (RPM): Wearables and AI platforms track vitals, medication adherence, and lifestyle factors in real time.

- Healthcare Chatbots and Virtual Assistants: These help patients book appointments, understand symptoms, or follow post-discharge instructions.

- Operational Efficiency: AI optimizes staffing schedules, supply chain logistics, and patient flow through hospitals.

Emerging Solutions

- AI for Drug Discovery: Speeds up research timelines by identifying compounds and simulating molecular interactions.

- Digital Pathology: AI analyzes pathology slides, helping reduce diagnostic turnaround time and increase accuracy.

- Genomics and Precision Medicine: AI assists in identifying genetic mutations and tailoring therapies accordingly.

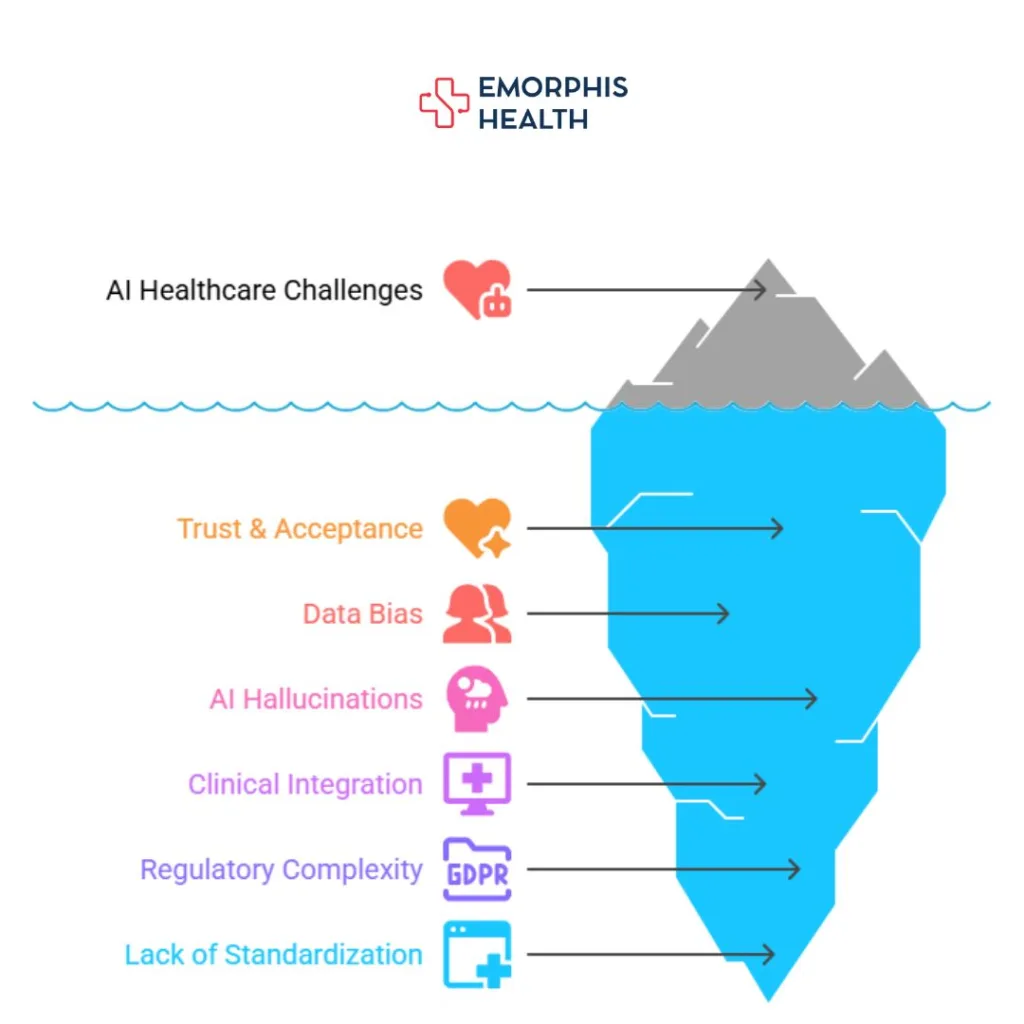

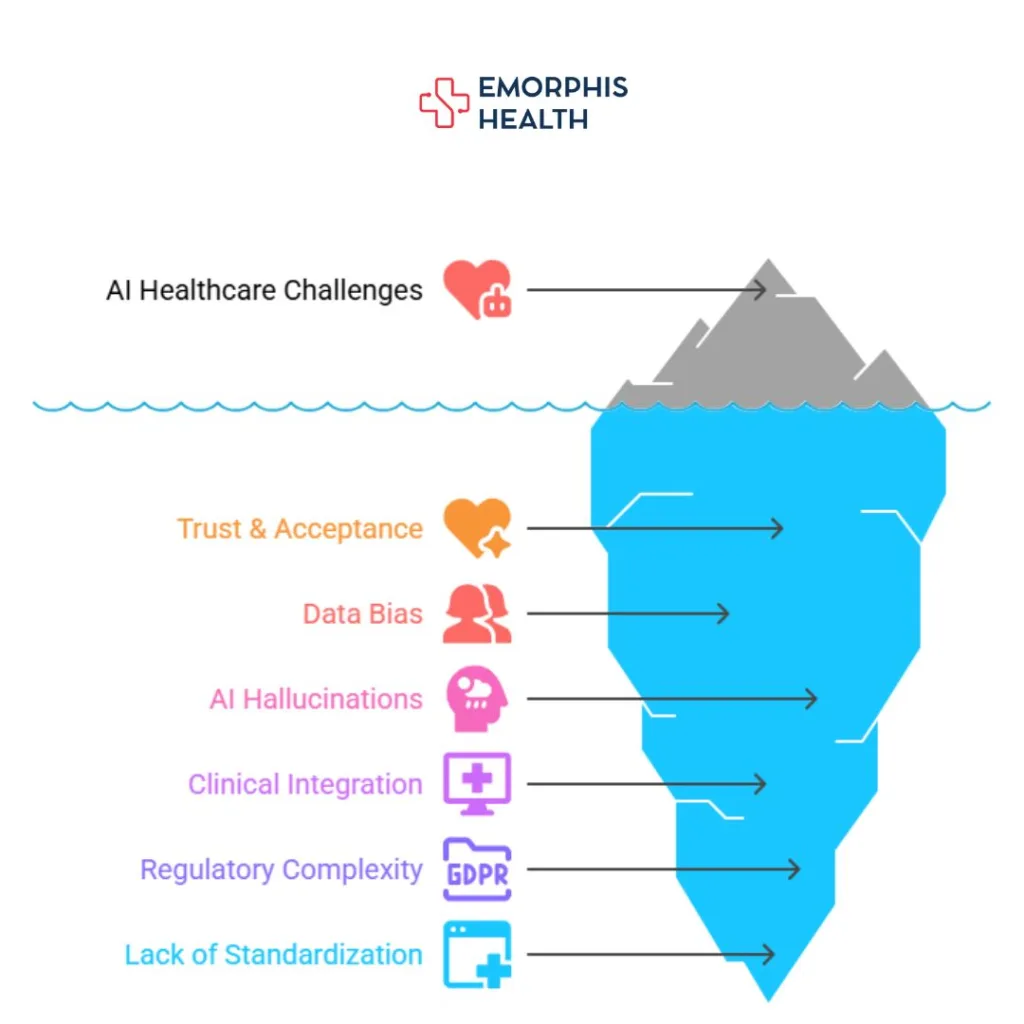

Challenges on the Ground

Despite significant progress, real-world deployment of AI in healthcare encounters several roadblocks:

- Trust and Acceptance: Patients and even clinicians often mistrust AI due to opaque decision-making processes and fear of dehumanizing care.

- Data Bias and Homogeneity: Many models are trained on data that lack ethnic, geographic, and gender diversity—leading to biased outputs.

- AI Hallucinations: Generative models can produce clinically inaccurate or fabricated responses. These risks can be reduced, though not eliminated, with context-aware mechanisms such as RAG (Retrieval-Augmented Generation), which grounds responses in vetted sources.

- Clinical Integration: Many AI tools aren’t designed with real-world clinical workflows in mind, leading to usability issues and adoption hesitancy.

- Regulatory Complexity: Navigating FDA approvals, HIPAA compliance, and international data laws (like GDPR) remains daunting for developers.

- Lack of Standardization: No universal standards exist yet for training, benchmarking, or validating medical AI systems.
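The RAG mitigation mentioned above can be sketched as two steps: retrieve passages from a vetted source, then instruct the generator to answer only from them. The example below covers only retrieval and prompt assembly. It assumes a small in-memory set of approved passages (hypothetical content) and uses bag-of-words cosine similarity as a stand-in for a production embedding model; no LLM API is called.

```python
import math
import re
from collections import Counter

# Hypothetical vetted knowledge base (e.g., approved institutional guidance).
PASSAGES = [
    "Metformin is contraindicated in patients with severe renal impairment.",
    "Warfarin dosing is adjusted based on INR monitoring.",
    "Influenza vaccination is recommended annually for most adults.",
]


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2, min_score: float = 0.2) -> list[str]:
    """Return up to k passages most similar to the query, dropping weak matches."""
    q = tokenize(query)
    scored = sorted(((cosine(q, tokenize(p)), p) for p in passages), reverse=True)
    return [p for score, p in scored[:k] if score >= min_score]


def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the numbered sources below and cite them. "
        "If the sources do not contain the answer, say you do not know.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


question = "Is metformin safe with severe renal impairment?"
top = retrieve(question, PASSAGES)
prompt = build_grounded_prompt(question, top)
print(prompt)
```

The minimum-score threshold matters: without it, passages that share only filler words such as "is" would be passed to the model as if they were relevant evidence.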

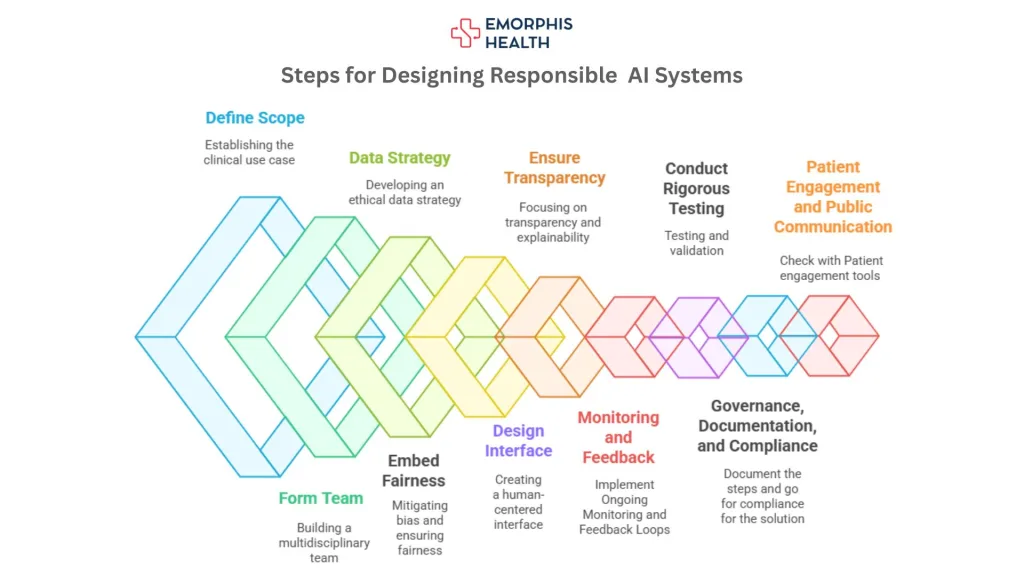

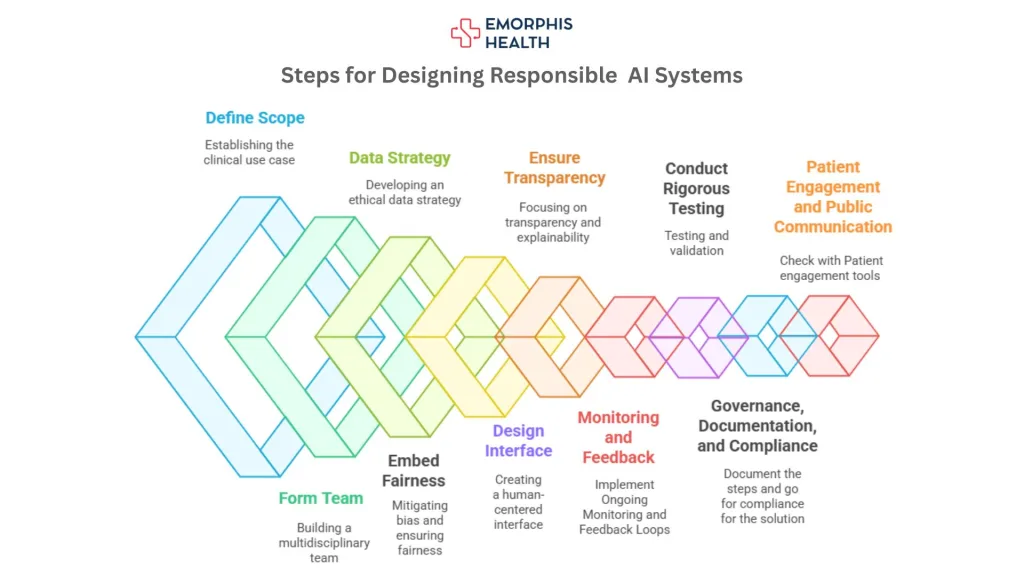

Designing Responsible AI Systems

Designing Responsible AI in Healthcare is a multifaceted process that requires not just technical excellence but also ethical foresight, stakeholder collaboration, and operational integration. Below is a structured approach to building AI systems that are safe, effective, and aligned with patient-centered values:

Step 1: Define the Scope and Clinical Use Case

- Begin with a clear clinical objective—whether it’s assisting in diagnostics, automating administrative workflows, or monitoring patient vitals.

- Ensure that the use case aligns with the goals of Responsible AI in Healthcare, such as reducing clinician burden, improving patient safety, or enabling equitable access.

Step 2: Form a Multidisciplinary Development Team

- Include AI engineers, data scientists, clinicians, ethicists, patient advocates, legal experts, and UX designers.

- This diverse team ensures that decisions are informed by both technical and human perspectives, a pillar of Responsible AI in Healthcare.

Step 3: Data Strategy with Ethics at the Core

- Ensure datasets are diverse, representative, and de-identified to reduce bias and protect privacy.

- Document data provenance, quality checks, and consent mechanisms.

- Adhere to data regulations like HIPAA, GDPR, and local governance standards.
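A minimal de-identification step might drop direct identifiers, replace the record number with a keyed pseudonym so a patient's records can still be linked, and generalize age. The identifier list, field names, and key handling below are hypothetical and deliberately incomplete: HIPAA's Safe Harbor method enumerates 18 categories of identifiers, and GDPR generally still treats pseudonymized data as personal data.

```python
import hashlib
import hmac

# Hypothetical, partial list of direct identifiers. A real pipeline must cover
# every identifier category its governing regulation defines.
DIRECT_IDENTIFIERS = {"mrn", "name", "phone", "email", "address"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): stable per patient, cannot be recomputed without the key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def age_group(age: int) -> str:
    """Ten-year bands (an illustrative choice); ages 90 and over collapse into one group."""
    if age >= 90:
        return "90+"
    start = (age // 10) * 10
    return f"{start}-{start + 9}"


def deidentify(record: dict, secret_key: bytes) -> dict:
    clean = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS and k != "age"}
    clean["pseudo_id"] = pseudonymize(record["mrn"], secret_key)
    clean["age_group"] = age_group(record["age"])
    return clean


# Usage with a made-up record; in practice the key lives in a secrets manager.
key = b"example-key-do-not-use"
raw = {"mrn": "12345", "name": "Jane Doe", "phone": "555-0100", "age": 93, "diagnosis_code": "E11.9"}
clean = deidentify(raw, key)
print(clean["age_group"], sorted(clean))  # 90+ ['age_group', 'diagnosis_code', 'pseudo_id']
```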

Step 4: Embed Fairness and Bias Mitigation

- Continuously test the system on diverse demographic groups.

- Include bias detection metrics (e.g., equalized odds, demographic parity) in the development pipeline.

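- Monitor and retrain models regularly to counter performance degradation as patient populations and clinical practices change, including degradation that affects some groups more than others.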
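The two metrics named in this step can be computed directly from predictions, labels, and a group attribute. The sketch below reports the demographic parity difference (the largest gap in positive-prediction rates between groups) and summarizes equalized odds as the larger of the true-positive-rate and false-positive-rate gaps, one common convention. The data is toy data, and the code assumes every group contains both positive and negative cases.

```python
def group_rates(y_true, y_pred, groups):
    """Selection rate, true-positive rate, and false-positive rate for each group."""
    rates = {}
    for g in sorted(set(groups)):
        pairs = [(t, p) for t, p, grp in zip(y_true, y_pred, groups) if grp == g]
        positives = [p for t, p in pairs if t == 1]  # predictions on true cases
        negatives = [p for t, p in pairs if t == 0]  # predictions on non-cases
        rates[g] = {
            "selection_rate": sum(p for _, p in pairs) / len(pairs),
            "tpr": sum(positives) / len(positives),
            "fpr": sum(negatives) / len(negatives),
        }
    return rates


def fairness_gaps(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)

    def gap(metric):
        values = [r[metric] for r in rates.values()]
        return max(values) - min(values)

    return {
        "demographic_parity_diff": gap("selection_rate"),
        "equalized_odds_diff": max(gap("tpr"), gap("fpr")),
    }


# Toy labels (1 = condition present) and model flags for two groups, A and B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gaps = fairness_gaps(y_true, y_pred, groups)
print(gaps)  # {'demographic_parity_diff': 0.5, 'equalized_odds_diff': 0.5}
```

In this toy case the model flags group A more often and catches more of its true cases, so both gaps are 0.5; an audit would investigate before deployment. Libraries such as Fairlearn provide tested implementations of these metrics.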

Step 5: Build for Transparency and Explainability

- Use interpretable models, or apply post-hoc explanation tools such as SHAP or LIME (or attention visualizations for deep learning models), to show which inputs most influenced each prediction.

- Include an AI factsheet or dashboard that informs clinicians about the model’s training data, risk level, and confidence scores.
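For an inherently interpretable model such as logistic regression, each feature's contribution to the prediction can be read off exactly, which is the kind of output a clinician-facing explanation can surface. The sketch below uses made-up coefficients for a hypothetical readmission-risk model; black-box models would instead need post-hoc tools such as SHAP or LIME, whose APIs are not shown here.

```python
import math

# Hypothetical coefficients; real values would come from training and validation.
INTERCEPT = -3.0
WEIGHTS = {"prior_admissions": 0.8, "age_over_65": 0.5, "num_medications": 0.1}


def predict_with_explanation(features: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return the predicted probability and per-feature log-odds contributions, largest first."""
    contributions = {name: weight * features[name] for name, weight in WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic function
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


prob, reasons = predict_with_explanation(
    {"prior_admissions": 2, "age_over_65": 1, "num_medications": 4}
)
print(f"Estimated risk: {prob:.1%}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f} log-odds")
```

Showing the ranked contributions next to the score lets a clinician check whether the drivers make clinical sense for this patient.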

Step 6: Human-Centered Interface Design

- Develop interfaces that are clinician-friendly, intuitive, and non-intrusive.

- Include easy-to-read alerts, explainable suggestions, and a clear path to override AI decisions.

- Make Responsible AI in Healthcare visible in the clinician’s experience, not just a background process.
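A clear override path can be made concrete in the data model: the AI produces a suggestion, the clinician records the final action, and any override carries a reason that can feed later review. The class and field names below are hypothetical, and the clinical content is illustrative only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AISuggestion:
    patient_ref: str
    recommendation: str
    rationale: str      # plain-language explanation shown with the alert
    confidence: float   # model score shown to the clinician, 0 to 1


@dataclass(frozen=True)
class ClinicalDecision:
    suggestion: AISuggestion
    clinician_id: str
    accepted: bool
    final_action: str
    override_reason: Optional[str] = None
    decided_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def record_decision(suggestion: AISuggestion, clinician_id: str, accept: bool,
                    final_action: Optional[str] = None,
                    reason: Optional[str] = None) -> ClinicalDecision:
    """The clinician always makes the final call; an override must state an action and a reason."""
    if accept:
        return ClinicalDecision(suggestion, clinician_id, True, suggestion.recommendation)
    if not final_action or not reason:
        raise ValueError("An override requires a final action and a reason.")
    return ClinicalDecision(suggestion, clinician_id, False, final_action, reason)


alert = AISuggestion("pt-001", "Escalate to rapid response team",
                     "Heart rate and respiratory rate rising over 4 hours", 0.72)
decision = record_decision(alert, "clinician-42", accept=False,
                           final_action="Continue hourly observations",
                           reason="Trend explained by post-operative pain; reassessed at bedside")
try:
    record_decision(alert, "clinician-42", accept=False)
    override_without_reason_allowed = True
except ValueError:
    override_without_reason_allowed = False
print(decision.accepted, decision.final_action)
```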

Step 7: Conduct Rigorous Testing and Validation

- Simulate real-world environments and clinical workflows using synthetic or retrospective data.

- Implement stress testing for edge cases, system downtime, and adversarial scenarios.

- Validate models in clinical trials or observational studies before full deployment.
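Edge-case and downtime testing can be written as ordinary automated checks against a fail-safe wrapper. In the sketch below, which assumes hypothetical plausibility limits (real limits need clinical sign-off) and a toy stand-in model, the wrapper defers to the clinician whenever an input is missing, implausible, or not a number, or the model call fails.

```python
from typing import Callable

# Hypothetical plausibility limits for illustration only.
PLAUSIBLE_RANGES = {
    "heart_rate": (20, 300),
    "systolic_bp": (40, 300),
    "temperature_c": (25.0, 45.0),
}
DEFER = "DEFER_TO_CLINICIAN"


def safe_predict(model: Callable[[dict], str], vitals: dict) -> str:
    """Run the model only on complete, plausible inputs; otherwise fail safe."""
    for name, (low, high) in PLAUSIBLE_RANGES.items():
        value = vitals.get(name)
        # NaN fails both comparisons, so it is also rejected here.
        if value is None or not (low <= value <= high):
            return DEFER
    try:
        return model(vitals)
    except Exception:
        return DEFER  # e.g., model service down or unexpected internal error


def toy_model(vitals: dict) -> str:  # hypothetical stand-in model
    return "HIGH_RISK" if vitals["heart_rate"] > 120 else "LOW_RISK"


def unavailable_model(vitals: dict) -> str:  # simulates system downtime
    raise ConnectionError("model service unavailable")


normal = {"heart_rate": 80, "systolic_bp": 120, "temperature_c": 37.0}
edge_cases = {
    "normal input": (toy_model, normal, "LOW_RISK"),
    "missing value": (toy_model, {"heart_rate": 80, "systolic_bp": 120}, DEFER),
    "implausible value": (toy_model, {**normal, "heart_rate": 900}, DEFER),
    "not a number": (toy_model, {**normal, "temperature_c": float("nan")}, DEFER),
    "service downtime": (unavailable_model, normal, DEFER),
}
for label, (model, vitals, expected) in edge_cases.items():
    assert safe_predict(model, vitals) == expected, label
print("all edge-case checks passed")
```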

Step 8: Implement Ongoing Monitoring and Feedback Loops

- Create a dashboard to track performance metrics in real time (e.g., accuracy, false positives, downtime).

- Build feedback tools where clinicians can flag unusual outputs—feeding into model retraining processes.

- This step reinforces the core idea behind Responsible AI in Healthcare: continuous improvement through transparency and accountability.
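A real-time dashboard needs a metrics source behind it. The sketch below, an assumption about how such a component might look rather than a prescribed design, keeps a rolling window of labeled predictions, computes accuracy and false-positive rate, and raises alerts when the false-positive rate crosses a threshold or clinicians have flagged outputs for review. The window size and threshold are hypothetical.

```python
from collections import deque


class RollingMonitor:
    """Accuracy and false-positive rate over the most recent `window` labeled cases."""

    def __init__(self, window: int = 500, max_fpr: float = 0.2):
        self.cases = deque(maxlen=window)  # (predicted, actual) pairs, oldest dropped first
        self.max_fpr = max_fpr
        self.flags = []  # clinician reports queued for review and retraining

    def record(self, predicted: int, actual: int) -> None:
        self.cases.append((predicted, actual))

    def flag(self, case_id: str, note: str) -> None:
        self.flags.append({"case_id": case_id, "note": note})

    def metrics(self) -> dict:
        n = len(self.cases)
        correct = sum(p == a for p, a in self.cases)
        on_negatives = [p for p, a in self.cases if a == 0]
        return {
            "n": n,
            "accuracy": correct / n if n else 0.0,
            "fpr": sum(on_negatives) / len(on_negatives) if on_negatives else 0.0,
        }

    def alerts(self) -> list[str]:
        m = self.metrics()
        messages = []
        if m["fpr"] > self.max_fpr:
            messages.append(f"False-positive rate {m['fpr']:.0%} exceeds {self.max_fpr:.0%}")
        if self.flags:
            messages.append(f"{len(self.flags)} clinician flag(s) awaiting review")
        return messages


monitor = RollingMonitor(window=4, max_fpr=0.25)
for predicted, actual in [(1, 1), (1, 0), (1, 0), (0, 0), (0, 1)]:
    monitor.record(predicted, actual)  # the window keeps only the last 4 cases
monitor.flag("case-17", "High risk score despite normal labs")
print(monitor.metrics())
print(monitor.alerts())
```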

Step 9: Governance, Documentation, and Compliance

- Maintain version control, audit trails, and documentation for every AI component.

- Set up internal AI ethics committees or governance boards.

- Ensure compliance with applicable regulatory requirements, such as those of the FDA (USA) and the MHRA (UK), and the EU AI Act.
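Audit trails are easier to trust when tampering is detectable. One common technique, sketched below under the assumption of a simple in-memory log with hypothetical event, actor, and model names, chains entries by hash: each entry stores the hash of the previous one, so editing any earlier entry breaks verification.

```python
import hashlib
import json
from datetime import datetime, timezone


def _entry_hash(body: dict) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry includes the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def append(self, event: str, model_version: str, actor: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "model_version": model_version,
            "actor": actor,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        body["hash"] = _entry_hash(body)  # computed before the "hash" key exists
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _entry_hash(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("model approved by governance board", "risk-model 2.1.0", "ethics-committee")
log.append("model deployed to ICU", "risk-model 2.1.0", "ml-ops")
ok_before = log.verify()
log.entries[0]["actor"] = "someone-else"  # simulated after-the-fact edit
ok_after = log.verify()
print(ok_before, ok_after)  # True False
```

Hash chaining only detects changes if the latest hash is also stored somewhere the log's writer cannot alter; production systems typically add write-once storage and access controls.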

Step 10: Patient Engagement and Public Communication

- Share with patients how AI is used in their care journey.

- Use simple language to explain benefits, limitations, and data usage.

- Transparency builds trust, which is a core value of Responsible AI in Healthcare.

Global and Local Governance

AI governance frameworks are evolving globally, aiming to balance innovation with safety:

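- United States (FDA): AI/ML-based medical devices are subject to premarket review (for example, 510(k) clearance, De Novo classification, or premarket approval), and predetermined change control plans allow pre-specified model updates alongside real-world performance monitoring.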

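- European Union (EU AI Act): Classifies AI systems that are, or are safety components of, medical devices requiring third-party conformity assessment as “high-risk” and mandates risk management, data governance, transparency, and human oversight before market entry.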

- Canada and Australia: Regulators are updating policies to account for AI’s adaptive nature and the need for continuous validation.

- India’s National Digital Health Mission (NDHM, since renamed the Ayushman Bharat Digital Mission): Introduces guidelines on ethical AI use in telehealth and public health systems.

Organizational Governance Models

- AI Ethics Committees: Healthcare institutions are forming internal review boards to evaluate the ethical implications of AI tools.

- Model Fact Sheets and AI Labels: Initiatives are emerging to inform clinicians and patients about AI capabilities, limitations, and data provenance—like “nutrition labels” for algorithms.
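A model fact sheet can be kept as structured data and rendered for clinicians, which makes it easy to version alongside the model. The fields below are an assumption loosely following the items this article lists (training data, risk level, limitations); all names and values are hypothetical placeholders, and performance figures should come from the actual validation report.

```python
from dataclasses import dataclass, field


@dataclass
class ModelFactSheet:
    """A 'nutrition label' for a clinical model. Field names are illustrative."""
    name: str
    version: str
    intended_use: str
    not_intended_for: list[str]
    training_data: str
    performance: dict[str, str]  # metric -> value, ideally broken down by subgroup
    known_limitations: list[str] = field(default_factory=list)
    risk_level: str = "unclassified"

    def render(self) -> str:
        lines = [
            f"{self.name} (v{self.version})",
            f"Intended use: {self.intended_use}",
            f"Not intended for: {', '.join(self.not_intended_for)}",
            f"Training data: {self.training_data}",
            f"Risk level: {self.risk_level}",
            "Performance:",
            *[f"  - {metric}: {value}" for metric, value in self.performance.items()],
            "Known limitations:",
            *[f"  - {item}" for item in self.known_limitations],
        ]
        return "\n".join(lines)


sheet = ModelFactSheet(
    name="Readmission Risk Model",
    version="1.0.0",
    intended_use="Flag adult inpatients at elevated 30-day readmission risk for care-team review",
    not_intended_for=["pediatric patients", "autonomous discharge decisions"],
    training_data="Retrospective EHR records from a single health system (placeholder)",
    performance={"AUROC (overall)": "fill in from validation report"},
    known_limitations=["Not validated outside the training health system"],
    risk_level="moderate",
)
text = sheet.render()
print(text)
```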

The Future of Responsible AI in Healthcare

The future of Responsible AI is not just technological—it’s human, inclusive, and collaborative:

- AI and Clinician Co-pilots: The next phase involves AI as an intelligent assistant rather than a substitute. Think of it as a “clinical co-pilot” providing insights while leaving the final call to physicians.

- Standardized Responsible AI Certifications: Expect to see global bodies offering certifications for ethical, explainable AI models.

- Interoperable AI Ecosystems: AI tools will seamlessly integrate with EHRs, patient portals, and digital therapeutics—ensuring a holistic patient experience.

- Democratization of AI: With low-code/no-code tools and open-source models, healthcare startups and local institutions will be empowered to create community-specific solutions.

- Responsible AI Education: Medical schools and hospitals will start training clinicians on how to critically evaluate and work with AI systems.

- Global AI Collaborations: Cross-border data collaborations will help train AI models that are globally applicable yet locally effective.

- Sustainability and Green AI: Energy-efficient AI systems will become a priority as healthcare embraces climate-conscious innovation.

Where We Stand Now

AI is no longer a futuristic concept in healthcare; it’s already reshaping diagnostics, care coordination, patient engagement, and operational efficiency. But with great innovation comes greater responsibility. Responsible AI in Healthcare is not just a checklist of ethical practices; it’s a living, breathing commitment to fairness, transparency, and patient-centricity.

At this turning point, healthcare providers, technologists, and policymakers must collaborate to ensure that AI tools are not only accurate and efficient but also safe, equitable, and trustworthy.

That’s exactly where Emorphis Health steps in.

We specialize in building AI-driven healthcare platforms that go beyond innovation—infusing every solution with ethics, compliance, and human-centered design. From remote patient monitoring to intelligent care management systems, our solutions are built to deliver real-world outcomes aligned with the principles of Responsible AI in Healthcare.

Why connect with our experts?

Because we don’t just talk about ethical AI—we build it. Whether you’re planning your next AI integration or optimizing an existing solution, our domain experts help you navigate the technical, regulatory, and ethical complexities with ease.

Let’s reimagine healthcare together—responsibly.