Multimodal AI in Healthcare, Transforming Diagnosis, Treatment, and Patient Care

Contents

- 1 Multimodal AI in Healthcare, Transforming Diagnosis, Treatment, and Patient Care

- 2 What is Multimodal AI?

- 3 Applications of Multimodal AI in Healthcare

- 4 Benefits of Multimodal AI in Healthcare

- 5 Challenges and Solutions in Implementing Multimodal AI in Healthcare

- 6 The Future of Multimodal AI in Healthcare

- 7 Conclusion

Multimodal AI in healthcare is transforming how the medical industry uses data to improve diagnosis, treatment, patient monitoring, and operational efficiency. By processing multiple types of data together (text, images, audio, sensor streams, and structured records), multimodal AI builds a single, integrated view of a patient's condition, often in real time. This helps clinicians make better-informed decisions, reduces the burden on healthcare staff, and makes it easier to personalize care.

This article explores the key concepts, applications, benefits, challenges, and the future of multimodal AI in healthcare.

To fully grasp its potential, let’s begin by understanding what multimodal AI is and how it works.

What is Multimodal AI?

Multimodal AI refers to artificial intelligence systems that can process, understand, and correlate multiple types of data at the same time, such as images (like X-rays), text (like clinical notes in EHRs), and audio (like doctor-patient conversations). Rather than relying on a single mode of input, multimodal AI combines different data types to extract richer context, make more informed decisions, and interact more naturally with users.

In healthcare, this means AI that can “see,” “read,” “listen,” and “reason” across a variety of clinical data formats, bringing together diverse information to support physicians and improve patient outcomes.

Common data sources include:

- Text (EHRs, clinical notes, lab reports)

- Images (X-rays, MRIs, pathology slides)

- Audio (patient-clinician conversations)

- Video (surgical footage, patient gait analysis)

- Sensor data (wearables, remote monitors)

- Structured data (vitals, genomics, lab tests)
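As a rough illustration of how such sources can be combined, the sketch below shows early (feature-level) fusion: each modality is first turned into a numeric vector by its own encoder, and the vectors are concatenated into one input for a downstream model. The modality names, vector sizes, and the `PatientRecord` and `early_fuse` helpers are hypothetical; real systems would take these vectors from trained image, text, and signal encoders.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical fixed vector size per modality. In practice these vectors
# come from modality-specific encoders (an image model, a text model, etc.).
MODALITY_DIMS: Dict[str, int] = {"text": 4, "image": 4, "vitals": 3}

@dataclass
class PatientRecord:
    """One patient's per-modality feature vectors; any modality may be missing."""
    patient_id: str
    features: Dict[str, Optional[List[float]]] = field(default_factory=dict)

def early_fuse(record: PatientRecord) -> List[float]:
    """Concatenate modality vectors in a fixed order.

    Missing modalities are zero-filled, and one 0/1 presence flag per modality
    is appended so a downstream model can tell "absent" from "all zeros".
    """
    fused: List[float] = []
    flags: List[float] = []
    for name, dim in MODALITY_DIMS.items():
        vec = record.features.get(name)
        if vec is None:
            fused.extend([0.0] * dim)
            flags.append(0.0)
        else:
            if len(vec) != dim:
                raise ValueError(f"{name}: expected {dim} values, got {len(vec)}")
            fused.extend(float(v) for v in vec)
            flags.append(1.0)
    return fused + flags

# Example: a patient with notes and vitals but no imaging study.
record = PatientRecord("p1", {"text": [0.1, 0.2, 0.3, 0.4], "vitals": [72.0, 98.0, 120.0]})
print(early_fuse(record))
```

Appending a presence flag per modality is one simple way to handle the gaps that are common in clinical records. Late fusion, which combines per-modality predictions instead of raw features, is a frequent alternative.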

Now that we understand the core of multimodal AI, let’s explore how it is already making an impact in healthcare.

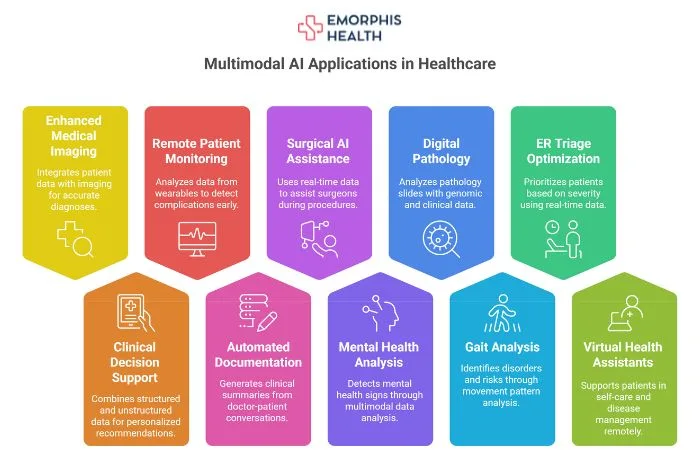

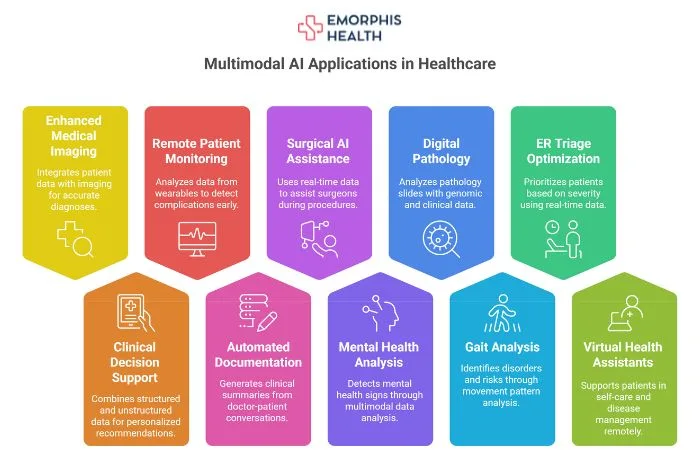

Applications of Multimodal AI in Healthcare

From radiology to remote monitoring, multimodal AI is reshaping healthcare delivery across disciplines. Below are some of the most promising applications:

A. Enhanced Medical Imaging Interpretation

Multimodal AI supports radiologists by combining imaging data such as MRI and CT scans with patient history, clinical notes, and lab results, which can lead to earlier and more accurate diagnoses.

As AI continues to support diagnosis, it also plays a crucial role in helping doctors make informed treatment decisions.

B. Clinical Decision Support Systems (CDSS)

By combining structured data (vitals, labs), unstructured data (notes, transcripts), and historical patterns, CDSS tools driven by multimodal AI offer clinicians highly personalized and evidence-backed recommendations for treatment.

Beyond decision support, multimodal AI is also proving essential in tracking patient health remotely.

C. Remote Patient Monitoring and Alerts

Smart wearables and IoT devices generate continuous streams of data, such as ECG, blood oxygen, and movement patterns. Multimodal AI analyzes these inputs in the context of the patient's history to detect early signs of complications or disease progression.
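A minimal sketch of comparing live readings against a patient's own history, assuming past readings are stored per signal: each new reading is expressed as a z-score relative to that patient's baseline, and an alert fires only when several streams deviate at once. The function names, the 3-standard-deviation threshold, and the two-signal rule are illustrative assumptions, not clinical guidance.

```python
import math
from statistics import mean, stdev
from typing import Dict, List

def zscore(value: float, history: List[float]) -> float:
    """Distance of `value` from the patient's own history, in standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two historical readings")
    mu, sd = mean(history), stdev(history)
    if sd == 0:
        # A perfectly flat history: any change at all counts as an extreme deviation.
        return 0.0 if value == mu else math.copysign(math.inf, value - mu)
    return (value - mu) / sd

def check_vitals(current: Dict[str, float],
                 baseline: Dict[str, List[float]],
                 threshold: float = 3.0,   # illustrative, not a clinical cutoff
                 min_signals: int = 2) -> List[str]:
    """Return the deviating signals, but only when at least `min_signals`
    streams deviate together; otherwise return an empty list (no alert)."""
    flagged = [name for name, value in current.items()
               if name in baseline
               and abs(zscore(value, baseline[name])) >= threshold]
    return flagged if len(flagged) >= min_signals else []

# Hypothetical personal baselines and two sets of new readings.
baseline = {"heart_rate": [70, 72, 71, 69, 73], "spo2": [97, 98, 97, 98, 97]}
print(check_vitals({"heart_rate": 110, "spo2": 91}, baseline))  # ['heart_rate', 'spo2']
print(check_vitals({"heart_rate": 110, "spo2": 97}, baseline))  # []
```

Requiring agreement across streams trades a little sensitivity for fewer false alarms, which matters because alert fatigue is a known problem in continuous monitoring.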

Monitoring is just one piece of the puzzle. To support the doctor’s daily work, AI is now also automating time-consuming tasks.

D. Automated Documentation and Ambient Scribing

Ambient intelligence systems listen to doctor-patient conversations and, using NLP and audio processing, generate clinical summaries and SOAP (Subjective, Objective, Assessment, Plan) notes. This reduces administrative burden and can improve documentation accuracy.

As AI supports efficiency in the clinic, it also lends precision and control in the operating room.

E. Surgical AI and Real-time Assistance

Surgical robots now use video, audio cues, and patient vitals in real time to assist surgeons. Multimodal AI allows these systems to interpret complex inputs and respond dynamically during procedures.

In addition to physical health, AI is now addressing mental and behavioral health through multimodal cues.

F. Mental and Behavioral Health Analysis

By analyzing tone, speech patterns, facial micro-expressions, and language content, multimodal AI can help detect signs of depression, anxiety, or early-stage Alzheimer's disease, potentially more effectively than traditional questionnaires alone.

As diagnostics and monitoring evolve, so too do the tools for analyzing complex pathology data.

G. Digital Pathology

AI can now analyze pathology slides alongside genomic, demographic, and clinical data to offer improved cancer classification, treatment suggestions, and risk prediction—all through a multimodal lens.

Beyond pathology, movement analysis through video and sensor input is another powerful use case.

H. Gait and Movement Analysis

AI models that process movement patterns captured via video or accelerometers can help identify neurological disorders, estimate fall risk, and track therapy effectiveness in patients recovering from injury or living with chronic conditions.

Efficiency also extends to emergency care—where quick, accurate triage can save lives.

I. Emergency Room (ER) Triage Optimization

Multimodal AI analyzes intake notes, sensor vitals, and historical records in real time to help ER staff prioritize patients based on severity and condition, improving time-to-care and outcomes.

Outside the hospital, virtual assistants powered by AI continue to improve the patient experience.

J. Virtual Health Assistants

These AI tools combine voice, language, facial recognition, and medical data to support patients in self-care, medication adherence, and chronic disease management—especially for remote or elderly populations.

With so many use cases, it’s clear that multimodal AI offers wide-ranging benefits. Let’s now examine these advantages in detail.

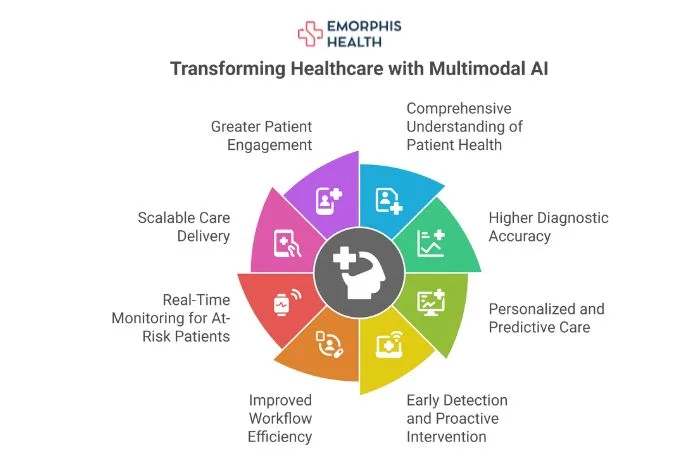

Benefits of Multimodal AI in Healthcare

1. Comprehensive Understanding of Patient Health

By correlating multiple data types, multimodal AI delivers a more complete picture of a patient's condition, enabling early detection and better treatment planning.

This comprehensive approach naturally leads to more accurate and timely diagnoses.

2. Higher Diagnostic Accuracy

When different inputs agree (for example, radiology images and lab values), the system's confidence increases, reducing both false positives and missed diagnoses.
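Why agreement raises confidence can be illustrated with a simple probabilistic sketch. Assume each modality's model outputs a probability for the same diagnosis, that all of them were calibrated against the same prior, and that the modalities are conditionally independent given the diagnosis (a naive-Bayes assumption that real imaging and lab data only roughly meet). Under those assumptions, their evidence adds in log-odds space. `fuse_probabilities` is a hypothetical helper, not any particular product's method.

```python
import math
from typing import Sequence

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def sigmoid(x: float) -> float:
    # Numerically stable logistic function for large positive or negative x.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def fuse_probabilities(probs: Sequence[float], prior: float) -> float:
    """Combine per-modality probabilities for one diagnosis.

    Each modality contributes its log-odds shift away from the shared prior;
    the shifts are summed, which is valid only under conditional independence.
    """
    for p in list(probs) + [prior]:
        if not 0.0 < p < 1.0:
            raise ValueError("probabilities must be strictly between 0 and 1")
    prior_lo = logit(prior)
    combined = prior_lo + sum(logit(p) - prior_lo for p in probs)
    return sigmoid(combined)

print(fuse_probabilities([0.8, 0.8], prior=0.5))  # agreement: about 0.941
print(fuse_probabilities([0.8, 0.2], prior=0.5))  # disagreement: about 0.5
```

Two modalities that each report 0.8 against a 0.5 prior combine to 16/17 (about 0.94), higher than either alone, while one reporting 0.8 and another 0.2 cancel back to 0.5. If the modalities are correlated, this simple sum overstates confidence, which is one reason learned fusion models are used in practice.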

With a strong foundation in accuracy, AI can now focus on tailoring care to the individual.

3. Personalized and Predictive Care

Multimodal AI helps forecast disease progression by analyzing a patient’s lifestyle, genetics, and medical history, allowing clinicians to offer tailored interventions and anticipate complications.

Prediction also plays a vital role in critical care and high-risk patient management.

4. Early Detection and Proactive Intervention

By monitoring subtle changes across modalities, AI can detect deteriorating conditions—like sepsis or stroke—before they become life-threatening, enabling proactive care.

Automation is another important area of improvement.

5. Improved Workflow Efficiency

Physicians can reduce time spent on repetitive tasks like note-taking, data retrieval, or report generation, leading to faster clinical decisions and reduced burnout.

Efficiency improvements go hand-in-hand with better safety and monitoring.

6. Real-Time Monitoring for At-Risk Patients

Multimodal AI continuously monitors vitals, sensor data, and historical records to detect red flags in high-risk patients, enabling timely alerts and interventions.

This also makes healthcare more scalable and accessible.

7. Scalable Care Delivery

By automating key aspects of diagnosis, monitoring, and patient engagement, multimodal AI enables health systems to serve more patients—especially in remote or underserved regions—without increasing staff load.

Finally, all these benefits converge to offer more engaged and empowered patients.

8. Greater Patient Engagement

Multimodal virtual assistants interact naturally using language, emotion detection, and biometric inputs, fostering trust and encouraging patients to follow through on care plans.

Despite these advantages, adopting multimodal AI does come with challenges. Let’s address them next.

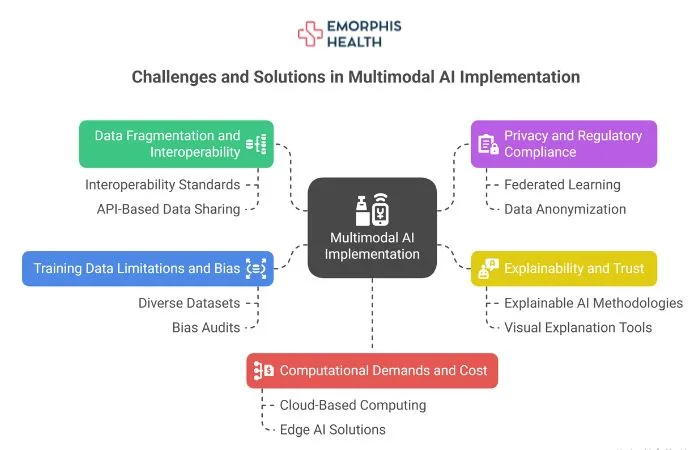

Challenges and Solutions in Implementing Multimodal AI in Healthcare

While multimodal AI holds tremendous potential to revolutionize healthcare, its successful deployment involves navigating several complex challenges. These span technical, ethical, and regulatory domains. Below is a detailed explanation of each challenge followed by practical solutions.

a. Data Fragmentation and Interoperability

The Challenge:

Healthcare data is often distributed across multiple systems and formats—such as electronic health records (EHRs), imaging platforms, lab systems, and wearable devices. These systems are frequently incompatible or lack standardization, making it difficult to unify data for AI processing. This fragmentation hampers the ability of multimodal AI models to access and interpret data holistically.

The Solution:

To address this, healthcare providers and developers should adopt interoperability standards such as FHIR (Fast Healthcare Interoperability Resources) to ensure consistent data exchange. Additionally, API-based data sharing and middleware platforms can be used to integrate disparate data sources and formats into a unified ecosystem suitable for multimodal AI applications.
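To show what adopting FHIR looks like at the data level, here is a sketch that flattens a FHIR R4 `Observation` resource into a tabular row that a model pipeline could consume. The sample heart-rate observation (LOINC code 8867-4) and the `observation_to_row` helper are made up for illustration. A production pipeline would fetch resources from a FHIR server's REST API and validate them against the specification.

```python
import json
from typing import Any, Dict, Optional

LOINC = "http://loinc.org"

def observation_to_row(resource: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Flatten a FHIR Observation that carries a valueQuantity into one row.

    Returns None for other resource types or for observations without a numeric value.
    """
    if resource.get("resourceType") != "Observation":
        return None
    quantity = resource.get("valueQuantity")
    if not quantity or "value" not in quantity:
        return None
    # Pick the LOINC code out of the (possibly multi-system) coding list.
    loinc_code = next(
        (c.get("code") for c in resource.get("code", {}).get("coding", [])
         if c.get("system") == LOINC),
        None,
    )
    return {
        "patient": resource.get("subject", {}).get("reference"),
        "loinc": loinc_code,
        "value": float(quantity["value"]),
        "unit": quantity.get("unit"),
        "time": resource.get("effectiveDateTime"),
    }

# Hypothetical Observation as it might arrive from a FHIR server.
SAMPLE = json.loads("""{
  "resourceType": "Observation",
  "status": "final",
  "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2024-01-01T08:00:00Z",
  "valueQuantity": {"value": 72, "unit": "beats/minute",
                    "system": "http://unitsofmeasure.org", "code": "/min"}
}""")

print(observation_to_row(SAMPLE))
```

Because every FHIR-compliant source uses the same resource structure and shared code systems such as LOINC, a single mapping like this can be reused across hospitals instead of writing one parser per vendor format.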

b. Privacy and Regulatory Compliance

The Challenge:

Multimodal AI systems process sensitive and personal health information, including medical histories, diagnostic images, and voice recordings. This raises serious concerns about data security, patient consent, and compliance with regulations like HIPAA, GDPR, and other local laws. Any breach can result in legal penalties and loss of trust.

The Solution:

Organizations should implement federated learning techniques that allow AI to be trained without moving data outside secure environments. Data anonymization, encryption, and role-based access control further enhance data protection. Collaborating with legal and compliance teams from the start of the AI lifecycle ensures adherence to all relevant regulatory frameworks.
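The core idea of federated learning can be sketched with federated averaging (FedAvg), one widely used algorithm: each site trains on its own data, and only model parameters are sent to a central server, which averages them weighted by each site's sample count. This toy version fits a one-variable linear model with plain gradient descent; the site data, learning rate, and round counts are hypothetical, and real deployments add secure aggregation and other protections on top.

```python
from typing import List, Tuple

Weights = Tuple[float, float]  # (slope w, intercept b) of a 1-D linear model
Dataset = List[Tuple[float, float]]  # (x, y) pairs held by one site

def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.01, epochs: int = 50) -> Weights:
    """Gradient descent on one site's data; the raw data never leaves this function."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server step: average site weights, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    w = sum(wts[0] * n for wts, n in updates) / total
    b = sum(wts[1] * n for wts, n in updates) / total
    return w, b

def train_federated(sites: List[Dataset], rounds: int = 20) -> Weights:
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each site starts from the current global model and shares only parameters.
        updates = [(local_update(global_weights, data), len(data)) for data in sites]
        global_weights = federated_average(updates)
    return global_weights

# Two hypothetical hospitals whose data both follow y = 2x + 1.
site_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
site_b = [(-2.0, -3.0), (2.0, 5.0)]
w, b = train_federated([site_a, site_b])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Model updates can still leak some information about the underlying data, which is why federated learning is usually combined with the anonymization, encryption, and access controls described above.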

c. Explainability and Trust

The Challenge:

AI systems—especially complex multimodal ones—are often viewed as “black boxes” by clinicians. Without clear explanations for how a model arrived at a decision, clinicians may hesitate to rely on its recommendations, particularly in high-stakes scenarios like surgery or cancer treatment.

The Solution:

Adopting explainable AI (XAI) methodologies can help improve transparency. For instance, visual explanation tools such as saliency maps can show which parts of an image influenced a diagnosis. Providing confidence scores, decision summaries, and visual dashboards alongside AI output builds user trust and supports responsible clinical adoption.
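Saliency maps come in several forms. The sketch below uses occlusion sensitivity, one of the simplest: it masks one block of the image at a time and records how much the model's score drops, so the regions whose removal hurts the score most are highlighted. `toy_score` is a hypothetical stand-in for a trained classifier; gradient-based methods such as Grad-CAM are common alternatives in practice.

```python
from typing import Callable, List

Image = List[List[float]]

def occlusion_map(image: Image, score: Callable[[Image], float],
                  patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Occlusion sensitivity: for each patch x patch block, the drop in the
    model's score when that block is replaced by `fill`. Larger drop means
    the block mattered more to the prediction."""
    h, w = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - score(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat

def toy_score(img: Image) -> float:
    """Hypothetical 'model' that only looks at the top-left 2x2 region."""
    return sum(img[r][c] for r in range(2) for c in range(2)) / 4

image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(image, toy_score):
    print(row)
```

For this toy model, only the top-left block lights up, which is exactly the region the "model" relies on. Occlusion needs one model call per block, so it is slower than gradient-based maps, but it works with any model that returns a score.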

d. Training Data Limitations and Bias

The Challenge:

AI models are only as good as the data they are trained on. If the training data lacks diversity in terms of age, gender, ethnicity, or socioeconomic background, the model may exhibit biased performance. This can result in poor outcomes for underrepresented populations and widen health disparities.

The Solution:

Developers should ensure the use of diverse and inclusive datasets during model training. Regular bias audits and performance evaluations across different demographic groups are essential. Synthetic data generation and data augmentation techniques can also be employed to fill gaps and ensure fairness and generalization in real-world applications.
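A bias audit can start as simply as computing the same metric separately for each demographic group. This sketch computes sensitivity (the share of true cases the model catches) per group and flags the model when the gap between the best- and worst-served groups exceeds a tolerance. The record format and the 0.05 tolerance are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Record = Tuple[str, int, int]  # (group, true_label, predicted_label); 1 = condition present

def sensitivity_by_group(records: Sequence[Record]) -> Dict[str, float]:
    """True-positive rate per group, computed only over patients who have the condition."""
    tp: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                tp[group] += 1
    return {g: tp[g] / positives[g] for g in positives}

def audit(records: Sequence[Record], max_gap: float = 0.05) -> Tuple[Dict[str, float], bool]:
    """Return per-group sensitivity and whether the largest gap exceeds `max_gap`."""
    rates = sensitivity_by_group(records)
    if not rates:
        raise ValueError("no positive cases to audit")
    gap = max(rates.values()) - min(rates.values())
    return rates, gap > max_gap

# Hypothetical evaluation results for two groups.
data = ([("A", 1, 1)] * 4 + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2
        + [("A", 0, 0), ("B", 0, 1)])
print(audit(data))  # ({'A': 1.0, 'B': 0.5}, True)
```

Sensitivity is only one lens; a fuller audit would also compare specificity, calibration, and positive predictive value per group, since improving one metric can worsen another.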

e. Computational Demands and Cost

The Challenge:

Processing and analyzing multimodal data in real time requires significant computational resources. High-performance GPUs, data storage, and network infrastructure can be expensive, especially for small or resource-constrained healthcare facilities. This can hinder scalability and limit access to advanced AI tools.

The Solution:

To overcome these limitations, organizations can use cloud computing platforms that provide scalable infrastructure on demand. Edge AI solutions, where processing happens locally on devices, can reduce latency and operational costs. Model optimization techniques such as pruning, quantization, and knowledge distillation can also shrink models and speed up inference while keeping accuracy close to that of the original model.
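Quantization is the easiest of these techniques to show in a few lines. This sketch applies symmetric per-tensor int8 quantization: each weight is stored as an 8-bit integer plus one shared floating-point scale, cutting storage from 4 bytes (float32) to 1 byte per weight at the cost of a rounding error of at most half the scale. Production toolkits add per-channel scales, calibration data, and integer compute kernels that this toy version omits.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    if not weights:
        raise ValueError("no weights to quantize")
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 codes."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 127]
print(dequantize(q, scale))
```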

Despite these challenges, continued advances in AI research, data infrastructure, and regulatory alignment are steadily addressing many of the current limitations. As adoption grows and systems become more robust, the focus is now shifting toward what lies ahead.

The future of multimodal AI in healthcare promises not only smarter diagnostics and personalized treatments, but also a complete transformation in how care is delivered, monitored, and experienced.

Let’s explore the emerging innovations and trends that will define the next chapter of this technology.

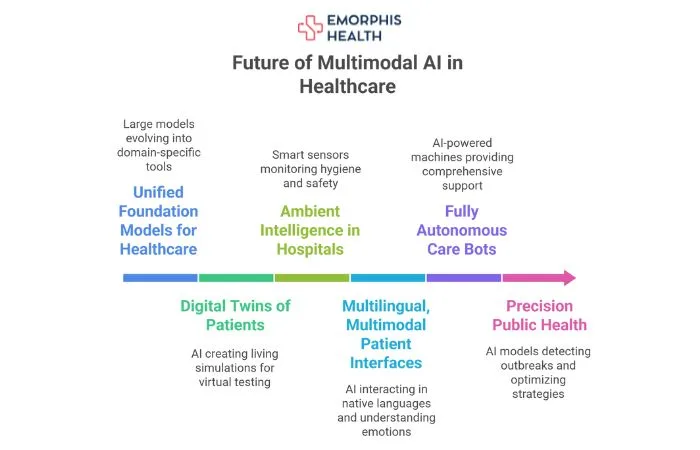

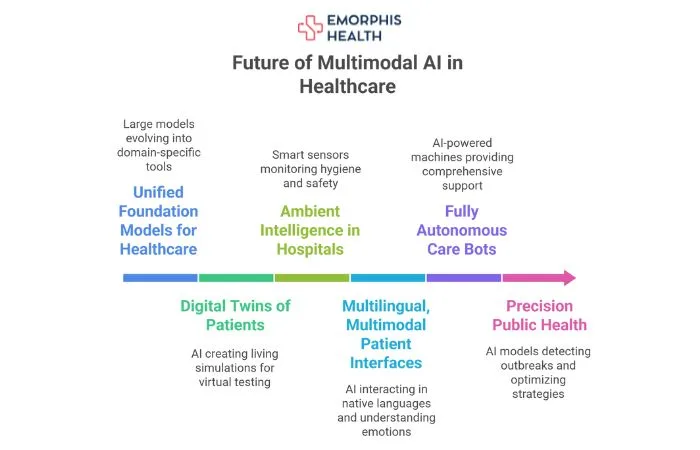

The Future of Multimodal AI in Healthcare

With emerging advances in AI and computing, the future of healthcare will be increasingly intelligent, connected, and proactive. Here’s what we can expect:

1. Unified Foundation Models for Healthcare

Large models such as Med-PaLM and GPT-4 are being adapted into domain-specific, multimodal tools that could power general-purpose healthcare assistants.

2. Digital Twins of Patients

AI will help create living simulations of patients using their medical, genomic, and lifestyle data, allowing doctors to test treatments virtually before applying them.

3. Ambient Intelligence in Hospitals

Hospitals will be equipped with smart sensors and AI that can monitor hygiene compliance, patient movement, and safety with minimal manual input.

4. Multilingual, Multimodal Patient Interfaces

Voice and vision-enabled AI will interact with patients in their native languages and understand emotional cues, making digital health more inclusive.

5. Fully Autonomous Care Bots

These AI-powered machines will combine facial recognition, voice response, and biometrics to provide physical, emotional, and medical support to patients at home.

6. Precision Public Health

Population-wide AI models will use multimodal inputs to detect disease outbreaks, track health trends, and optimize public healthcare strategies in real time.

Conclusion

Multimodal AI in healthcare is more than a technological breakthrough—it’s a paradigm shift. By unifying diverse data sources, AI becomes not just a tool, but a partner in delivering precision care, enhancing clinical workflows, and ultimately saving lives.

As hospitals, AI developers, and healthcare providers embrace this change, the focus must remain on trust, transparency, inclusivity, and real-world impact. In the coming years, multimodal AI will be at the heart of truly intelligent, compassionate, and efficient healthcare systems.