The Wake-Up Call: Healthcare is the Next AI Gold Rush

Contents

- The Wake-Up Call: Healthcare is the Next AI Gold Rush

- AI Adoption in Healthcare: Where We Stand

- Have a Product, But Not Sure How to Make It AI-Powered? Here’s How

- Tech Stack Breakdown: What You Need Under the Hood

- Compliance Built-In: The Rules You Can’t Ignore

- Avoid These Common Mistakes in AI Healthcare Development

  1. Using Non-Representative or Poor-Quality Data

  2. Skipping Clinician Collaboration from Day One

  3. Prioritizing Model Accuracy Over Clinical Usability

  4. Underestimating Compliance and Regulatory Hurdles

  5. No Feedback Loop to Improve the AI Over Time

The healthcare industry is undergoing a seismic transformation. Aging populations, the rising burden of chronic illness, clinician shortages, and skyrocketing operational costs are driving demand for smarter, faster, and more scalable solutions. At the heart of this transformation is artificial intelligence (AI), and software development companies are perfectly positioned to lead the charge, if they act now.

Building traditional software for healthcare is no longer enough. What the market demands today are intelligent, responsive systems that not only automate processes but also provide data-driven insights in real time. To stay competitive, software firms must develop AI software that can tackle healthcare’s biggest challenges, from diagnostic support and clinical documentation to predictive analytics and patient triage.

Market research projects the healthcare AI market to grow from $15 billion in 2023 to over $100 billion by 2030. This explosive growth isn’t just hype; it’s fueled by real adoption. Hospitals, insurance providers, pharmaceutical companies, and digital health startups are actively seeking partners that can deliver AI-enabled applications. For software companies that don’t evolve, this means watching contracts go to competitors who can offer AI as a core feature.

To seize this opportunity, development firms need to understand that integrating AI isn’t just a tech upgrade; it’s a strategic pivot. By learning how to develop AI software for healthcare, your team can transform existing products into cutting-edge platforms that deliver clinical and operational value. Those who start now will have a massive advantage in client acquisition, funding opportunities, and long-term scalability.

AI Adoption in Healthcare: Where We Stand

AI is rapidly becoming a strategic priority in healthcare, with organizations accelerating investment in generative AI to enhance clinical decision-making, reduce operational burden, and boost productivity.

According to The Healthcare AI Adoption Index, published by Bessemer Venture Partners, over 80% of healthcare leaders believe AI will significantly influence clinical workflows and cost structures within the next three to five years.

While enthusiasm is strong, the industry is still early in execution. Only about half of organizations have a defined AI strategy, yet more than 50% report measurable ROI within their first year of GenAI deployment. Providers lead in pilot deployments, especially of AI-powered ambient scribes that reduce the EHR documentation burden.

Many AI initiatives remain in the ideation or proof-of-concept phase, highlighting a clear opportunity for companies to develop AI software that meets real clinical needs. For software vendors and digital health innovators, now is the time to shape next-generation software for healthcare that’s built to deliver results from day one.

With AI adoption accelerating and early movers already seeing tangible results, the question isn’t if you should integrate AI; it’s how soon. If you already have a healthcare software product but aren’t sure how to infuse it with AI capabilities, you’re not alone. That’s where the real opportunity begins.

Have a Product, But Not Sure How to Make It AI-Powered? Here’s How

You’ve built software for healthcare that works: maybe a telemedicine platform, patient portal, EMR, or remote monitoring solution. But now clients are asking, “Does this use AI?” If you’re unsure how to take your product to the next level, here’s a step-by-step path to develop AI software by adding intelligence to your existing platform.

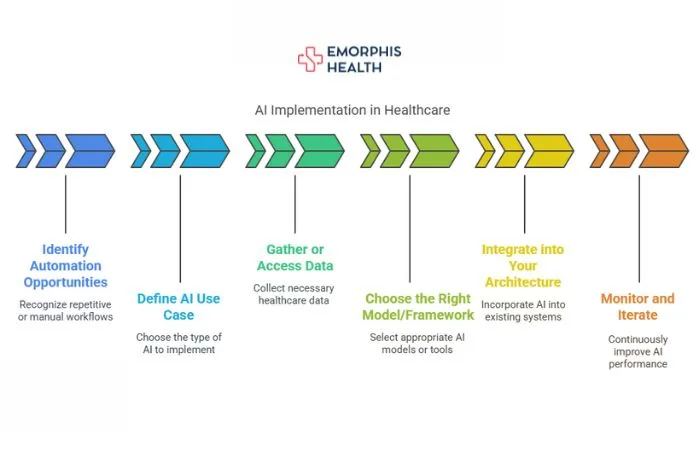

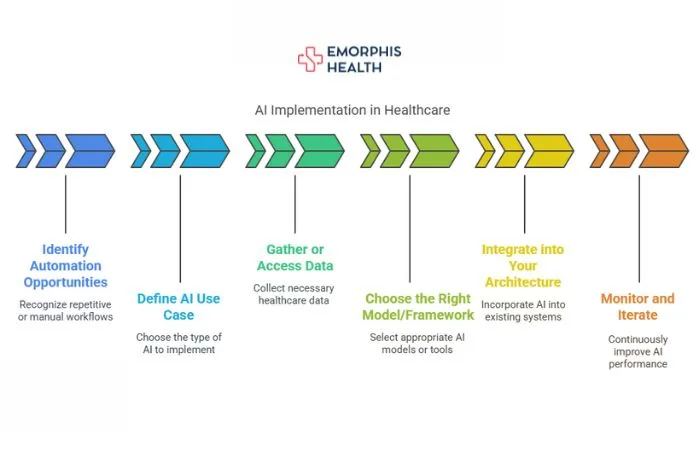

Step-by-Step to AI-Power Your Healthcare Software

Step 1: Identify Automation Opportunities

Find repetitive, manual, or insight-driven workflows. For example:

- Appointment scheduling

- Clinical form processing

- Radiology image interpretation

- Patient symptom triage

Step 2: Define the AI Use Case

Choose whether you’re implementing:

- NLP (Natural Language Processing)

- Predictive analytics

- Image recognition

- Chatbots or agents

This determines the kind of models you’ll use.

Step 3: Gather or Access Data

To develop AI software, you need healthcare data. Use:

- Your system’s historical data (if authorized)

- Public datasets (e.g., MIMIC, NIH Chest X-rays)

- Synthetic data (to simulate patient records safely)

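Ensure the data is de-identified and that its use complies with applicable regulations (such as HIPAA or GDPR) and any dataset license or data use agreement.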
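As a rough illustration of the de-identification step, the sketch below pseudonymizes one structured patient record: it drops direct identifiers, replaces the patient ID with a keyed hash (HMAC-SHA256) so records stay linkable, keeps only the birth year, and truncates the ZIP code. The field names and key handling are assumptions, and this is not a complete HIPAA Safe Harbor or Expert Determination implementation; keyed hashing produces pseudonymized, not anonymized, data.

```python
import hashlib
import hmac

# Hypothetical field names; adapt to your own schema.
DIRECT_IDENTIFIERS = {"name", "phone", "email", "address", "ssn"}

def pseudonymize_record(record: dict, secret_key: bytes) -> dict:
    """Copy `record`, removing direct identifiers and coarsening quasi-identifiers.
    Illustrative only; not a full de-identification procedure."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

    # Keyed hash keeps IDs joinable across tables without exposing the real MRN.
    if "patient_id" in out:
        digest = hmac.new(secret_key, str(out["patient_id"]).encode(), hashlib.sha256)
        out["patient_id"] = digest.hexdigest()[:16]

    # Keep only the year of birth (assumes an ISO "YYYY-MM-DD" string).
    if "birth_date" in out:
        out["birth_year"] = int(str(out.pop("birth_date"))[:4])

    # Truncate the ZIP code to its first three digits.
    if "zip" in out:
        out["zip3"] = str(out.pop("zip"))[:3]

    return out

record = {"patient_id": "MRN-001", "name": "Jane Doe", "birth_date": "1980-06-15",
          "zip": "02139", "heart_rate": 72}
# In practice, load the key from a secrets manager rather than hard-coding it.
print(pseudonymize_record(record, secret_key=b"example-key"))
```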

Step 4: Choose the Right Model/Framework

Select pre-trained AI models or train custom ones based on your data. Use tools like:

- GPT/Claude for chat and summarization

- scikit-learn or XGBoost for structured predictions

- MONAI for imaging
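For the structured-prediction case, a minimal scikit-learn sketch might look like the following. It trains a `GradientBoostingClassifier` on synthetic tabular data (made-up vitals and a made-up outcome rule standing in for your historical records) and reports ROC-AUC on a held-out split. The feature set and outcome rule are assumptions for illustration; XGBoost's `XGBClassifier` exposes a similar `fit`/`predict_proba` interface if you prefer it.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

def make_synthetic_vitals(n: int = 2000, seed: int = 0):
    """Synthetic stand-in for historical data: age, heart rate, SpO2, lactate."""
    rng = np.random.default_rng(seed)
    age = rng.integers(18, 90, n)
    heart_rate = rng.normal(85, 15, n)
    spo2 = rng.normal(96, 2.5, n)
    lactate = rng.gamma(2.0, 1.0, n)
    X = np.column_stack([age, heart_rate, spo2, lactate])
    # Made-up outcome rule plus randomness, so the model has a signal to learn.
    logit = (0.03 * (age - 50) + 0.04 * (heart_rate - 85)
             - 0.3 * (spo2 - 96) + 0.5 * (lactate - 2))
    y = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)
    return X, y

X, y = make_synthetic_vitals()
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

model = GradientBoostingClassifier(random_state=42)
model.fit(X_train, y_train)

# Probability of the positive class (e.g., deterioration) for each held-out row.
risk = model.predict_proba(X_test)[:, 1]
auc = roc_auc_score(y_test, risk)
print(f"Held-out ROC-AUC: {auc:.3f}")
```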

Step 5: Integrate into Your Architecture

Use microservices or APIs to add AI without breaking your current system. Examples:

- Add a smart triage chatbot

- Use an API for summarizing doctor notes

- Trigger AI-based alerts for abnormal vitals
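To make the API integration pattern concrete, here is a minimal sketch of a standalone alert service built only on Python's standard library. The existing platform POSTs a JSON payload of vitals and gets back a risk score and an alert flag, so the AI logic can evolve without touching the core system. The endpoint path, payload shape, and `NORMAL_RANGES` values are hypothetical placeholders (not clinical thresholds), and `score_vitals` stands in for a call to a trained model. A production service would more likely use a framework such as FastAPI or Flask, with authentication and TLS.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical placeholder ranges for illustration only, NOT clinical guidance.
NORMAL_RANGES = {
    "heart_rate": (50, 110),   # beats per minute
    "spo2": (92, 100),         # percent
    "resp_rate": (10, 24),     # breaths per minute
    "temp_c": (35.5, 38.0),    # degrees Celsius
}

def score_vitals(vitals: dict) -> float:
    """Placeholder risk score: share of supplied vitals outside NORMAL_RANGES.
    Replace with a call to your trained model."""
    checked = [k for k in NORMAL_RANGES if k in vitals]
    if not checked:
        return 0.0
    abnormal = sum(
        1 for k in checked
        if not NORMAL_RANGES[k][0] <= vitals[k] <= NORMAL_RANGES[k][1]
    )
    return abnormal / len(checked)

def build_alert_response(payload: dict, threshold: float = 0.25) -> dict:
    risk = score_vitals(payload.get("vitals", {}))
    return {"patient_ref": payload.get("patient_ref"),
            "risk": round(risk, 2),
            "alert": risk >= threshold}

class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/vitals-alert":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
        except ValueError:  # covers malformed JSON and undecodable bytes
            self.send_error(400, "Invalid JSON")
            return
        if not isinstance(payload, dict):
            self.send_error(400, "Expected a JSON object")
            return
        body = json.dumps(build_alert_response(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(host: str = "127.0.0.1", port: int = 8080) -> None:
    """Start the service; blocks until interrupted."""
    HTTPServer((host, port), AlertHandler).serve_forever()
```

Calling `serve()` starts the service; the existing platform would then POST something like `{"patient_ref": "abc", "vitals": {"heart_rate": 130, "spo2": 88}}` to `/v1/vitals-alert`. Keeping the scoring logic in plain functions, separate from the HTTP handler, makes it easy to unit-test and to swap in a real model later.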

Step 6: Monitor and Iterate

Include logging, feedback loops, and retraining to improve performance over time.

By following these steps, you can upgrade existing software for healthcare incrementally. When you develop AI software in small increments, each new capability can noticeably improve both business impact and user experience.

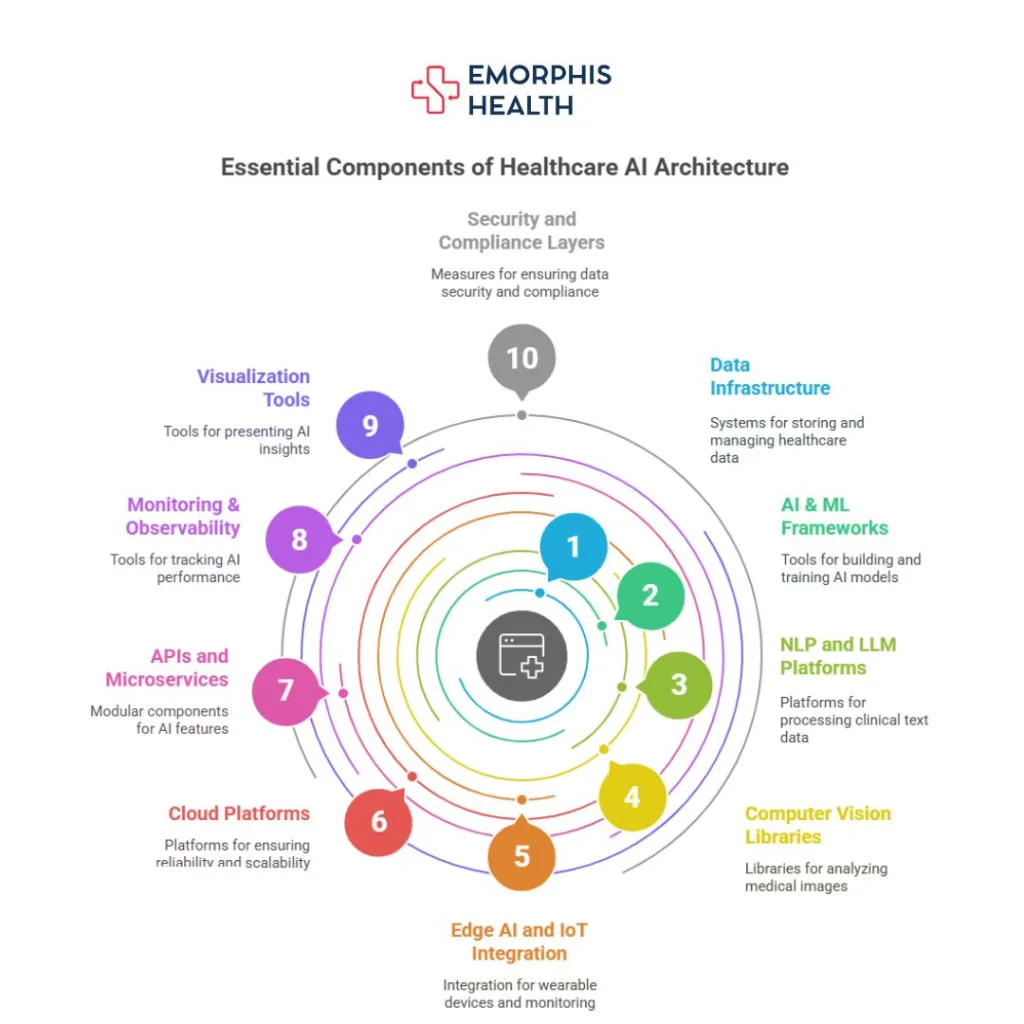

Tech Stack Breakdown: What You Need Under the Hood

To develop AI software for healthcare, your tech stack must balance innovation with privacy, scalability, and clinical-grade accuracy. Here’s a breakdown of the most essential components in a modern healthcare AI architecture.

1. Data Infrastructure

To develop AI software, start with structured, secure, and accessible data systems. Use:

- Data Lakes for storing large volumes (e.g., Amazon S3)

- ETL Pipelines to clean and format data

- FHIR APIs for EHR integration

This layer is the foundation of any effective healthcare AI system.
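The FHIR and ETL pieces often meet in a small transformation step. The sketch below flattens a FHIR R4 search-result `Bundle` of `Observation` resources into tabular rows ready for a data lake or feature store. It reads only standard Observation fields (`code.coding`, `subject.reference`, `effectiveDateTime`, `valueQuantity`) and takes the first coding entry; the sample payload is made up, and a real pipeline would also need to handle other value types, components, and unit normalization.

```python
import json

def flatten_observations(bundle: dict) -> list:
    """Turn Observation resources in a FHIR Bundle into flat dict rows."""
    rows = []
    for entry in bundle.get("entry", []):
        res = entry.get("resource", {})
        if res.get("resourceType") != "Observation":
            continue  # skip Patients, Encounters, etc. in mixed bundles
        coding = (res.get("code", {}).get("coding") or [{}])[0]
        qty = res.get("valueQuantity", {})
        rows.append({
            "observation_id": res.get("id"),
            "patient_ref": res.get("subject", {}).get("reference"),
            "code_system": coding.get("system"),
            "code": coding.get("code"),
            "display": coding.get("display"),
            "effective": res.get("effectiveDateTime"),
            "value": qty.get("value"),
            "unit": qty.get("unit"),
        })
    return rows

# Made-up sample Bundle with one heart-rate Observation (LOINC 8867-4).
sample_bundle = json.loads("""
{
  "resourceType": "Bundle",
  "type": "searchset",
  "entry": [
    {"resource": {
      "resourceType": "Observation",
      "id": "obs-1",
      "status": "final",
      "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
      "subject": {"reference": "Patient/123"},
      "effectiveDateTime": "2024-05-01T08:30:00Z",
      "valueQuantity": {"value": 72, "unit": "beats/minute"}
    }}
  ]
}
""")

for row in flatten_observations(sample_bundle):
    print(row)
```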

2. AI & ML Frameworks

For model building and training, use:

- TensorFlow: Good for production-grade models

- PyTorch: Flexible and research-friendly

- ONNX: An open format for moving models between frameworks and runtimes

These frameworks help you develop, train, and scale AI models efficiently.

3. NLP and LLM Platforms

To work with clinical text data:

- OpenAI GPT / Azure OpenAI

- Google Med-PaLM

- BioBERT / ClinicalBERT

These enhance note-taking, summarization, chatbots, and automated documentation in your software for healthcare.
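Before clinical text is sent to an externally hosted LLM, many teams strip obvious identifiers first. The sketch below shows the idea with a few regular expressions and then builds a summarization prompt. The patterns, the `MRN` format, and the prompt wording are assumptions; regex redaction will miss identifiers such as names in free text, and the actual API call is omitted because it depends on the provider SDK you use.

```python
import re

# Illustrative patterns only; real de-identification needs far broader coverage
# (names, addresses, dates, etc.), often via a dedicated clinical NLP tool.
REDACTION_PATTERNS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\bMRN[:#\s]*\d+\b", re.IGNORECASE), "[MRN]"),
    (re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"), "[PHONE]"),
]

def redact(text: str) -> str:
    """Replace each matched identifier with a placeholder token."""
    for pattern, placeholder in REDACTION_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text

def build_summary_prompt(note: str) -> str:
    return (
        "Summarize the following clinical note for a physician handoff. "
        "List active problems, medications, and pending tests. "
        "Do not add information that is not in the note.\n\n"
        f"NOTE:\n{redact(note)}"
    )

note = "Pt MRN: 483920, call 555-123-4567 or jdoe@example.com. BP 150/95, started lisinopril."
print(build_summary_prompt(note))
# Pass the prompt to your chosen LLM client here (provider-specific, omitted).
```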

4. Computer Vision Libraries

For medical imaging tasks:

- MONAI (Medical Open Network for AI)

- OpenCV combined with a DICOM library such as pydicom

These let you develop AI software that can analyze X-rays, MRIs, CT scans, and more.
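A common first step before feeding CT slices to a model is converting stored pixel values to Hounsfield units (HU) and applying an intensity window. The sketch below assumes you have already read the pixel array and the `RescaleSlope`/`RescaleIntercept` header values (for example with pydicom); the soft-tissue window of level 40 / width 400 HU is a widely used convention, chosen here only as an example. Inside a MONAI training pipeline, a transform such as `ScaleIntensityRange` does the same clipping and scaling.

```python
import numpy as np

def to_hounsfield(pixels: np.ndarray, slope: float, intercept: float) -> np.ndarray:
    """DICOM rescale: HU = stored_value * RescaleSlope + RescaleIntercept."""
    return pixels.astype(np.float32) * slope + intercept

def apply_window(hu: np.ndarray, level: float, width: float) -> np.ndarray:
    """Clip HU to [level - width/2, level + width/2] and scale to [0, 1]."""
    low, high = level - width / 2, level + width / 2
    return (np.clip(hu, low, high) - low) / (high - low)

# Toy 2x2 "slice" of stored values with a typical CT slope/intercept.
stored = np.array([[0, 1024], [2048, 1100]], dtype=np.int16)
hu = to_hounsfield(stored, slope=1.0, intercept=-1024.0)
soft_tissue = apply_window(hu, level=40, width=400)
print(hu)
print(soft_tissue)
```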

5. Edge AI and IoT Integration

For wearable devices and bedside monitoring:

- Use NVIDIA Jetson for edge deployment

- TensorFlow Lite or ONNX Runtime

These allow AI to run locally on medical devices with low latency.
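Deploying a compiled model with TensorFlow Lite or ONNX Runtime depends on your hardware and model format, so it is not shown here. The sketch below instead illustrates the kind of lightweight, constant-memory logic that often runs on-device alongside such a model: a rolling z-score check on a stream of heart-rate readings. The window size, the 3-sigma threshold, and the `min_std` floor are assumptions, not clinical alert criteria.

```python
from collections import deque
from statistics import fmean, pstdev

class RollingZScoreDetector:
    """Flags readings that deviate strongly from the recent window.
    Memory use is bounded by `window`, which suits constrained edge devices."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_std: float = 1.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_std = min_std  # floor avoids dividing by ~0 on flat signals

    def update(self, x: float) -> bool:
        is_anomaly = False
        if len(self.values) == self.values.maxlen:  # only score once the window is full
            mean = fmean(self.values)
            std = max(pstdev(self.values), self.min_std)
            is_anomaly = abs(x - mean) / std > self.threshold
        self.values.append(x)  # every reading joins the baseline, anomalies included
        return is_anomaly

detector = RollingZScoreDetector(window=5)
stream = [70, 72, 71, 73, 72, 140, 74]
flags = [detector.update(hr) for hr in stream]
print(flags)
```

Letting anomalous readings into the window keeps the detector from flagging a sustained new baseline forever; the trade-off is that a single spike briefly widens the tolerance band.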

6. Cloud Platforms

To ensure reliability, compliance, and scalability:

- AWS HealthLake

- Azure Health Data Services

- Google Cloud Healthcare API

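These platforms provide managed, HIPAA-eligible healthcare data services and global scaling, though compliance still depends on signing a business associate agreement (BAA) and configuring them correctly.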

7. APIs and Microservices

AI features should be modular:

- REST APIs or GraphQL for communication

- Containers (Docker) and Orchestration (Kubernetes)

This keeps AI features manageable and extensible across your healthcare software solutions.

8. Monitoring & Observability

Use tools like Prometheus, ELK Stack, or Datadog to track:

- Model drift

- Inference latency

- Errors
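Model drift is often tracked by comparing the distribution of an input feature (or of the model's scores) in production against the training baseline. One widely used statistic for this is the population stability index (PSI); the sketch below computes it over caller-chosen bin edges. The bin edges, the small `eps` for empty bins, and the common rule of thumb (below 0.1 stable, above 0.25 significant shift) are conventions rather than standards; the resulting value is what you would export as a metric to Prometheus or Datadog.

```python
import math
from bisect import bisect_right

def bin_proportions(values, edges, eps=1e-4):
    """Share of values in each bin defined by sorted interior cut points `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, eps) for c in counts]

def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

edges = [60, 80, 100]  # heart-rate bins: <60, [60, 80), [80, 100), >=100
baseline = [55, 65, 70, 75, 85, 90, 95, 105]
this_week = [95, 100, 105, 110, 115, 120, 125, 130]

print(f"PSI vs itself: {population_stability_index(baseline, baseline, edges):.3f}")
print(f"PSI this week: {population_stability_index(baseline, this_week, edges):.3f}")
```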

9. Visualization Tools

For reports and AI output:

- Tableau, Power BI, or Plotly Dash

Present complex AI insights clearly to clinicians or administrators.

10. Security and Compliance Layers

Essential for healthcare trust:

- End-to-end encryption

- Identity and Access Management (IAM)

- Tamper-evident audit logs (blockchain-based or conventional)
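On the audit-log side, a simple way to make logs tamper-evident without a blockchain is to hash-chain the entries: each record stores the SHA-256 hash of the previous one, so editing or deleting an earlier entry breaks verification. The sketch below is in-memory and its event fields are hypothetical; a real system would persist entries to append-only storage and separately protect the latest hash, since truncating the tail of the chain is not detectable from the chain alone.

```python
import hashlib
import json
from datetime import datetime, timezone

class HashChainedAuditLog:
    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, resource: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = HashChainedAuditLog()
log.append("dr.smith", "VIEW", "Patient/123")
log.append("triage-model-v2", "SCORE", "Patient/123")
print(log.verify())
log.entries[0]["actor"] = "someone.else"  # simulate tampering with an old entry
print(log.verify())
```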

A robust tech stack ensures your team can develop AI software that performs, complies, and scales.

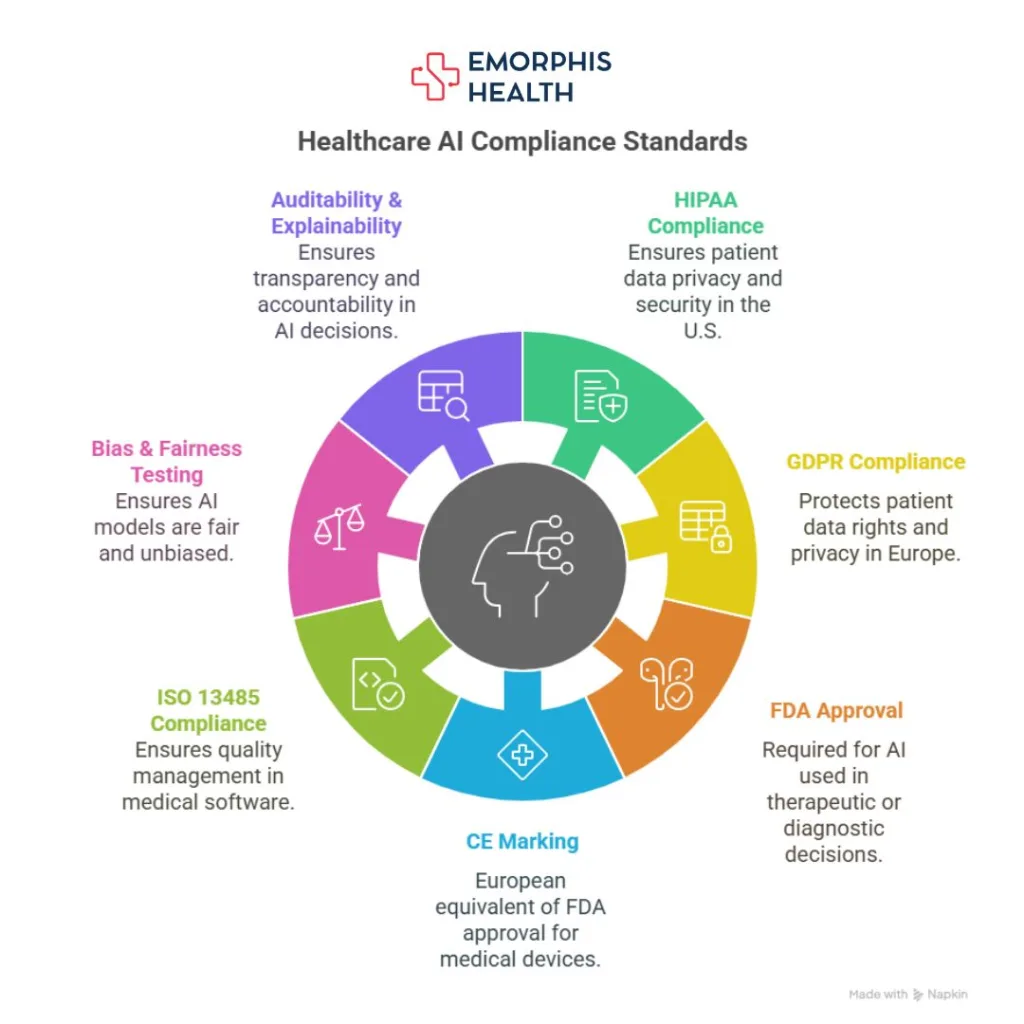

Compliance Built-In: The Rules You Can’t Ignore

When you develop AI software for healthcare, compliance isn’t optional; it’s mission-critical. Here’s a detailed breakdown of the key compliance standards you must meet, depending on your region and application type.

1. HIPAA (Health Insurance Portability and Accountability Act)

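Applies in the U.S. to covered entities (healthcare providers, health plans, and healthcare clearinghouses) and their business associates, which includes software vendors that handle patient data on their behalf:

- Encrypt protected health information (PHI) at rest and in transit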

- Ensure role-based access control

- Maintain secure data backups and logs
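Role-based access control can start as an explicit, deny-by-default mapping from roles to permitted actions, checked on every request. The roles and permission names below are hypothetical; in practice they usually live in your identity provider or IAM service rather than in application code.

```python
from enum import Enum
from typing import Iterable

class Permission(Enum):
    READ_PHI = "read_phi"
    WRITE_NOTES = "write_notes"
    VIEW_AI_SCORES = "view_ai_scores"
    EXPORT_DEIDENTIFIED = "export_deidentified"

# Hypothetical role definitions; least privilege means each role gets only what it needs.
ROLE_PERMISSIONS = {
    "physician": {Permission.READ_PHI, Permission.WRITE_NOTES, Permission.VIEW_AI_SCORES},
    "nurse": {Permission.READ_PHI, Permission.VIEW_AI_SCORES},
    "data_scientist": {Permission.EXPORT_DEIDENTIFIED},
}

def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    """Deny by default: unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)

print(is_allowed(["nurse"], Permission.READ_PHI))
print(is_allowed(["data_scientist"], Permission.READ_PHI))
```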

2. GDPR (General Data Protection Regulation)

Applies to software that processes the personal data of people in the EU:

- Explicit patient consent (or another valid legal basis) for processing health data

- Data minimization and portability

- “Right to be forgotten” compliance

3. FDA Approval

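If your AI is intended to diagnose, treat, or drive diagnostic or therapeutic decisions, it may qualify as “Software as a Medical Device” (SaMD):

- Submit clinical evaluation and risk analysis

- Depending on the risk class, FDA may require clinical studies before clearance or approval (e.g., via the 510(k), De Novo, or PMA pathways)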

4. CE Marking

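The EU’s conformity marking for medical devices, roughly the counterpart to FDA clearance:

- Compliance with the EU Medical Device Regulation (MDR), which replaced the Medical Device Directive (MDD), and relevant ISO standards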

- Clinical risk classification and conformity assessment

5. ISO 13485

Quality management system standard for medical devices, including software:

- Applies when you manufacture or maintain regulated software for healthcare

- Covers documentation, traceability, and testing

6. Bias & Fairness Testing

AI models must be fair and unbiased:

- Test against diverse datasets

- Evaluate demographic performance disparities

- Required for ethical AI compliance in some markets
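Evaluating demographic performance disparities can start with computing the same metric per subgroup and reporting the largest gap. The sketch below does this for sensitivity (true-positive rate), which matters when a missed diagnosis is the costlier error; the group labels and toy data are made up, and which metrics and gaps are acceptable is a clinical and regulatory decision.

```python
from collections import defaultdict

def sensitivity_by_group(y_true, y_pred, groups):
    """True-positive rate per subgroup; groups with no positive cases are omitted."""
    tp = defaultdict(int)
    pos = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}

def max_gap(rates: dict) -> float:
    """Difference between the best- and worst-served subgroup."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0

# Toy labels and predictions for two hypothetical subgroups "A" and "B".
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = sensitivity_by_group(y_true, y_pred, groups)
print(rates)
print(f"Largest sensitivity gap: {max_gap(rates):.2f}")
```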

7. Auditability & Explainability

To develop AI software that’s transparent:

- Maintain logs of all AI decisions

- Provide model confidence scores

- Enable manual override options for clinicians
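One way to cover all three points is to write a structured record for every AI output, capturing the model version, the confidence score, the explanation shown, and what the clinician ultimately did. The field names, the example model name, and the `needs_review` threshold below are hypothetical; records like these would typically be written to an append-only audit store rather than kept in memory.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class AIDecisionRecord:
    patient_ref: str
    model_name: str
    model_version: str
    prediction: str
    confidence: float                       # model-reported probability, 0..1
    explanation: dict                       # e.g., top contributing features
    clinician_action: Optional[str] = None  # "accepted", "overridden", or None if pending
    override_reason: Optional[str] = None
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def needs_review(self, min_confidence: float = 0.7) -> bool:
        """Route low-confidence outputs to mandatory human review."""
        return self.confidence < min_confidence

    def record_override(self, reason: str) -> None:
        self.clinician_action = "overridden"
        self.override_reason = reason

rec = AIDecisionRecord(
    patient_ref="Patient/123",
    model_name="sepsis-risk",
    model_version="1.4.2",
    prediction="high_risk",
    confidence=0.62,
    explanation={"lactate": 0.21, "heart_rate": 0.12},
)
print(rec.needs_review())
rec.record_override("Lactate elevated after seizure; not sepsis.")
print(json.dumps(asdict(rec), indent=2))
```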

Ignoring any of these can result in delays, fines, or product rejection. Build compliance into your development lifecycle, not just your launch checklist.

Find more details on understanding healthcare compliance.

Avoid These Common Mistakes in AI Healthcare Development

When companies begin to develop AI software for healthcare, they often encounter predictable challenges that can lead to wasted time, lost credibility, or unusable products. Here’s a list of the most common mistakes, along with how to avoid them.

1. Using Non-Representative or Poor-Quality Data

Why It Happens:

Teams often use:

- Public datasets that aren’t diverse (e.g., datasets skewed to a single gender, ethnicity, or geography)

- Data scraped from unrelated domains

- Synthetic or simulated data without proper validation

What Goes Wrong:

- AI models perform well in testing but fail on real-world clinical data

- Results are biased, leading to inaccurate diagnostics for underrepresented groups

- Regulators (the FDA, or EU notified bodies assessing CE marking) may reject your model for a lack of generalizability

How to Avoid It:

- Use real-world clinical datasets that reflect your target user population

- Clean and normalize data consistently

- Test AI models across multiple patient subgroups to surface bias early

Emorphis Pro Tip: Partner with hospitals or use federated learning to safely train models without transferring patient data.
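Federated learning keeps raw records at each hospital: sites train locally and share only model parameters, which a coordinator combines. The core aggregation step of the FedAvg approach is a weighted average of the site parameters, weighted by each site's number of training examples. The sketch below shows only that step with made-up parameter vectors; real deployments add communication, secure aggregation, and often differential privacy, usually through a dedicated framework rather than hand-written code.

```python
def federated_average(site_updates):
    """FedAvg aggregation: weighted mean of parameter vectors.

    site_updates: list of (num_examples, parameters) tuples, one per hospital.
    """
    total = sum(n for n, _ in site_updates)
    if total == 0:
        raise ValueError("No training examples reported")
    dim = len(site_updates[0][1])
    averaged = [0.0] * dim
    for n, params in site_updates:
        if len(params) != dim:
            raise ValueError("All sites must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += (n / total) * p  # larger sites get proportionally more weight
    return averaged

# Hypothetical round: three hospitals send locally trained weights, never patient rows.
updates = [
    (1000, [0.20, -0.50, 1.10]),
    (3000, [0.40, -0.30, 0.90]),
    (1000, [0.10, -0.70, 1.30]),
]
print(federated_average(updates))
```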

2. Skipping Clinician Collaboration from Day One

Why It Happens:

Many software teams rely solely on technical stakeholders or product managers to define AI functionality.

What Goes Wrong:

- You solve the wrong problem—an AI tool that doesn’t fit into the provider’s workflow

- Lack of clinical trust and adoption

- Medical terms and nuances are misunderstood or oversimplified

How to Avoid It:

- Involve clinicians during ideation, model design, testing, and iteration

- Host regular validation sessions with physicians and nurses

- Translate user pain points into AI use cases—not the other way around

Emorphis Pro Tip: Appoint a Chief Medical AI Advisor or an advisory board for long-term input.

3. Prioritizing Model Accuracy Over Clinical Usability

Why It Happens:

Teams get excited about high F1 scores, ROC-AUC, and top-k accuracy without thinking about the user context.

What Goes Wrong:

- Even highly accurate models can be difficult to use, understand, or trust

- Outputs lack interpretability, creating legal and ethical risks

- Clinicians may ignore or override AI suggestions, even when correct

How to Avoid It:

- Balance performance metrics with explainability and UX

- Integrate AI outputs into interfaces clinicians already use (EHRs, dashboards)

- Use SHAP or LIME to show which features drove each prediction, and explain that in plain language
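SHAP and LIME are separate libraries with their own APIs, so they are not reproduced here. For intuition: for a linear model with independent features, SHAP values reduce to each coefficient times the feature's deviation from its average (in log-odds units for a logistic model). The sketch below computes exactly that and turns the largest contributions into a short sentence; the coefficients, feature means, and wording template are hypothetical.

```python
# Hypothetical logistic-regression coefficients (log-odds per unit) and training means.
COEFFICIENTS = {"lactate": 0.55, "heart_rate": 0.03, "spo2": -0.25, "age": 0.02}
FEATURE_MEANS = {"lactate": 1.8, "heart_rate": 84.0, "spo2": 96.5, "age": 58.0}

def linear_attributions(x: dict) -> dict:
    """Contribution of each feature = coefficient * (value - mean), in log-odds."""
    return {f: COEFFICIENTS[f] * (x[f] - FEATURE_MEANS[f]) for f in COEFFICIENTS}

def explain_in_words(x: dict, top_k: int = 2) -> str:
    """Describe the top_k largest contributions (by magnitude) in plain language."""
    contribs = linear_attributions(x)
    top = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    parts = [
        f"{name} ({x[name]}) {'raised' if c > 0 else 'lowered'} the risk estimate"
        for name, c in top
    ]
    return "Main drivers: " + "; ".join(parts) + "."

patient = {"lactate": 4.2, "heart_rate": 118, "spo2": 93.0, "age": 61}
print(linear_attributions(patient))
print(explain_in_words(patient))
```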

Emorphis Pro Tip: Ask clinicians, “Would you act on this AI recommendation with the available explanation?”

4. Underestimating Compliance and Regulatory Hurdles

Why It Happens:

AI teams often move fast and treat compliance as a post-launch checklist item, not part of product design.

What Goes Wrong:

- You launch a product that violates HIPAA or GDPR

- Your AI recommendations are interpreted as “diagnosis,” triggering FDA scrutiny

- Audit logs, consent handling, and data storage fall short of legal standards

How to Avoid It:

- Design your AI software for HIPAA, GDPR, and FDA compliance from the start

- Include explainability, auditability, and access control in every release

- Build a “compliance-by-design” architecture and document everything

Emorphis Pro Tip: Use third-party healthcare legal counsel to review your architecture before scale-up.

5. No Feedback Loop to Improve the AI Over Time

Why It Happens:

Teams build static models and assume the job is done once deployed.

What Goes Wrong:

- Model performance decays over time due to concept drift or new clinical practices

- User feedback is ignored, leading to repeated poor experiences

- The AI becomes obsolete while the software remains “live.”

How to Avoid It:

- Set up real-time feedback capture from users (e.g., thumbs up/down, correction inputs)

- Create pipelines to retrain models periodically with fresh, anonymized data

- Monitor prediction accuracy and drift continuously
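A feedback loop needs two small pieces: a place to capture clinician reactions, and a rule for when accumulated feedback should trigger retraining or review. The sketch below keeps recent feedback in a bounded window and signals retraining when the acceptance rate drops or enough corrections pile up. The thresholds and field names are hypothetical, and the retraining job itself (on fresh, anonymized data) would run in your ML pipeline.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

@dataclass
class Feedback:
    prediction_id: str
    accepted: bool                     # thumbs up / down from the clinician
    correction: Optional[str] = None   # corrected label or text, if provided

class FeedbackMonitor:
    def __init__(self, window: int = 200, min_acceptance: float = 0.8,
                 max_corrections: int = 50, min_samples: int = 30):
        self.recent = deque(maxlen=window)  # rolling window of accept/reject signals
        self.corrections = []               # labelled examples for the next retraining run
        self.min_acceptance = min_acceptance
        self.max_corrections = max_corrections
        self.min_samples = min_samples

    def record(self, fb: Feedback) -> None:
        self.recent.append(fb.accepted)
        if fb.correction is not None:
            self.corrections.append(fb)

    def acceptance_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 1.0

    def should_retrain(self) -> bool:
        enough_data = len(self.recent) >= self.min_samples
        low_acceptance = enough_data and self.acceptance_rate() < self.min_acceptance
        return low_acceptance or len(self.corrections) >= self.max_corrections

monitor = FeedbackMonitor(min_samples=5)
for i, ok in enumerate([True, True, False, False, True, False]):
    monitor.record(Feedback(f"pred-{i}", ok, None if ok else "clinician-corrected label"))
print(f"{monitor.acceptance_rate():.2f}", monitor.should_retrain())
```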

Emorphis Pro Tip: Treat every AI deployment as a living system, not a one-time release.

Get more details on Responsible AI in Healthcare.

Act Now or Fall Behind: The 2025–2030 Healthcare AI Timeline

The window of opportunity to develop AI software for healthcare is open now—but it won’t stay that way forever. Within the next 3–5 years, AI capabilities will become a baseline expectation in all enterprise-grade healthcare software. Hospitals, payers, and pharma companies will no longer entertain vendors that don’t offer AI-enabled features.

Here’s what’s next:

- Autonomous clinical agents: AI assistants that manage workflows end-to-end.

- Personalized treatment platforms: Adaptive care pathways driven by real-time data.

- Multi-modal diagnostics: AI that processes images, lab results, and doctor notes together.

If you’re a software company, there’s still time to develop AI software that becomes the foundation for tomorrow’s healthcare. But the time to start is now.

Conclusion: Build With Intelligence or Be Replaced

AI is not an optional feature in the future of healthcare; it’s the foundation. As a software development company, if you’re not exploring ways to develop AI software for healthcare, you’re not just behind—you’re at risk of being replaced by more forward-thinking firms.

With the right approach, tools, and partners, your existing software for healthcare can evolve into a powerful, AI-driven product that meets the needs of modern providers, improves patient outcomes, and unlocks significant revenue potential.

Don’t wait for the disruption. Be the disruptor.

Connect with us to check out our white-label products and solutions, and various case studies on Healthcare AI development.